Science Highlights, January 18, 2016

Awards and Recognition

Travis Tenner wins DHS Early Career Award

Travis Tenner (Nuclear and Radiochemistry group, C-NR) has received an Early Career Award from the Department of Homeland Security (DHS) for research in technical nuclear forensics. The award aims to encourage research in nuclear forensics and to help young researchers become established in this vital national security research area. Projects can focus on either pre- or post-detonation science, and an interagency panel selects the awardees. The award provides $300K per year for two years.

Tenner’s research project began in 2017 and is titled “Optimizing the characterization of predetonation material and post-detonation debris particles by LG-SIMS for rapid-screening nuclear forensic analysis.” Determining actinide isotope ratios in pre-detonation material and post-detonation debris particles can reveal important data for nuclear forensic investigators. This capability can be applied to interdicted materials, failed nuclear device scenarios, radiological dispersal devices, and post-nuclear detonation debris samples. In addition, light-isotope ratios of post-detonation debris particles (e.g., high-explosive soot) from a radiological dispersal device or failed nuclear device are useful because expected fractionations can reveal certain performance characteristics of the device. However, it is not yet well understood whether secondary ion mass spectrometry (SIMS), used as a rapid screening technique, has the fidelity to distinguish the isotope signatures of individual particles.

Photo. LANL’s LG-SIMS is one of the few devices of its kind in the DOE complex that can be used to examine radiological samples.

Tenner will use LANL’s large-geometry SIMS (LG-SIMS) to investigate and document sample preparation techniques that control the dispersion of uranium and graphite reference materials onto a sample surface. He and his co-workers will evaluate which conditions of sample dispersion and SIMS ion beam characteristics provide the best balance of isotope image fidelity and analytical precision. The overall goal of the project is optimal, rapid isotope screening of “real-world” pre-detonation and post-detonation particles consisting of uranium-bearing and/or graphitic materials.
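At its simplest, the trade-off between isotope image fidelity and analytical precision is a counting-statistics problem. Spreading a fixed number of detected ions over more image pixels lowers the precision of each pixel’s isotope ratio.

The sketch below rests on several assumptions:
- ideal Poisson counting, with no detector dead time, background, or instrumental mass fractionation;
- a uniform sample;
- hypothetical ion totals.

It is not the LG-SIMS data-reduction procedure.

```python
import math

def ratio_rse(counts_minor: float, counts_major: float) -> float:
    """Relative standard error of an isotope ratio N_minor/N_major,
    assuming both counts are independent Poisson variables."""
    return math.sqrt(1.0 / counts_minor + 1.0 / counts_major)

def per_pixel_rse(total_minor: float, total_major: float, n_pixels: int) -> float:
    """Ratio RSE in each pixel when the same total counts are spread
    evenly over n_pixels image pixels (hypothetical uniform sample)."""
    return ratio_rse(total_minor / n_pixels, total_major / n_pixels)

# Hypothetical totals: 1e4 minor-isotope and 1e6 major-isotope ions detected.
for n_pixels in (1, 16, 256):
    rse = per_pixel_rse(1e4, 1e6, n_pixels)
    print(f"{n_pixels:4d} pixels -> per-pixel ratio RSE = {100 * rse:.2f}%")
```

Under these assumptions the per-pixel uncertainty grows as the square root of the number of pixels. A 16-fold finer image therefore costs a factor of 4 in ratio precision.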

The Lab purchased the LG-SIMS instrument using Institutional Investment funds for use in a wide variety of programs. The capability to examine radiological samples in a SIMS instrument opens new research avenues.

Tenner began working with secondary ion mass spectrometry while he was a graduate student at the University of Minnesota (Ph.D., Geology). As an experimental petrologist, he synthesized terrestrial mantle analogues and analyzed them by SIMS to determine the influence of trace amounts of water on the properties of Earth’s interior. Tenner worked as a postdoc and then as a research scientist at the University of Wisconsin – Madison, where he used SIMS to investigate the isotope characteristics of meteorites to explore the origin and evolution of the Solar System.

In 2015, Tenner joined LANL as a research scientist, applying his accumulated SIMS knowledge to the study of nuclear forensics. Because SIMS yields data with high spatial resolution, demonstrating its ability to determine the isotope characteristics of environmental samples is significant. Technical contact: Travis Tenner

Jaqueline Kiplinger given Distinguished Alumna Award

Jaqueline Kiplinger, a Laboratory Fellow in the Inorganic, Isotope and Actinide Chemistry Group (C-IIAC), received the University of Utah Department of Chemistry Distinguished Alumna Award at a special ceremony in Salt Lake City, UT. The award was established in 2011 to recognize and honor the most prominent and successful undergraduate, graduate, and postdoctoral alumni of the Department. Kiplinger received a Ph.D. in Organometallic Fluorocarbon Chemistry from the University of Utah. During her visit, she met with faculty and students and gave several invited seminars, including a retrospective on her time as a graduate student and reflections on her career since then.

Photo: Jaqueline Kiplinger (left) with Department of Chemistry Chair and Distinguished Professor Cynthia Burrows.

Kiplinger joined LANL as the first Frederick Reines Postdoctoral Fellow in 1999 and became a Technical Staff Member within Chemistry Division in 2002. She is an internationally recognized leader in f-element chemistry. Kiplinger is a Fellow of the American Association for the Advancement of Science, the Royal Society of Chemistry, and the American Institute of Chemists. Her scientific achievements have been recognized with a Laboratory Fellows Prize for Research, two R&D 100 Awards, three mentoring awards, and several LANL/NNSA Best-in-Class Pollution Prevention Awards. In 2015, Kiplinger became the first woman to receive the F. Albert Cotton Award in Synthetic Inorganic Chemistry from the American Chemical Society. She is also the first scientist at the Lab to have been honored with two national-level ACS awards, the first being the 1998 Nobel Laureate Signature Award in Chemistry. Technical contact: Jaqueline Kiplinger

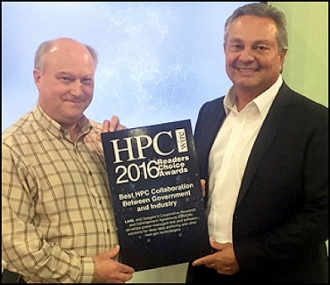

High Performance Computing honored for industry collaboration in HPCwire Awards

LANL’s High Performance Computing Division (HPC-DO) was recognized with an HPCwire Readers’ and Editors’ Choice Award for HPC’s collaboration with Seagate on next-generation data storage technologies. HPC Division Leader Gary Grider accepted the award at the 2016 International Conference for High Performance Computing, Networking, Storage and Analysis (SC16) in Salt Lake City, UT. All winners were revealed at the HPCwire booth at the event and on the HPCwire website.

Photo. (Left): Gary Grider (Division Leader for High Performance Computing) accepts the HPCwire Readers’ Choice award from Tom Tabor (CEO of Tabor Communications, publisher of HPCwire). Photo courtesy of HPCwire.

The Readers’ Choice award was given for best HPC Collaboration Between Government and Industry. HPC and Seagate’s Cooperative Research and Development Agreement (CRADA) develops power-managed disk and software solutions for deep data archiving and other next-generation technologies. John Sarrao, Associate Director for Theory, Simulation and Computation, said that engagement with industry has been a cornerstone of the Laboratory’s high performance computing efforts for many years and that it is an honor to be partnering with Seagate in advancing the frontiers of storage.

High performance computing has been entwined with the Laboratory’s national security science mission since the earliest days of computing, and Los Alamos holds many “firsts” in HPC breakthroughs. Today, supercomputers are integral to stockpile stewardship.

HPCwire is regarded as the #1 news and information resource covering the fastest computers in the world and the people who run them. The annual HPCwire Readers’ and Editors’ Choice Awards are determined through a nomination and voting process with the global HPCwire community, as well as selections from the HPCwire editors. The awards are an annual feature of the publication and constitute significant recognition from the HPC community. They help kick off the annual supercomputing conference, which showcases high-performance computing, networking, storage and data analysis.

The work supports the Lab’s Nuclear Deterrence mission area and the Information, Science and Technology science pillar. Technical contact: Gary Grider

Sebastian Deffner awarded Leon Heller Postdoc Publication Prize

The Laboratory presented the Leon Heller Postdoc Publication Prize in Theoretical Physics to Sebastian Deffner, former Director’s Postdoc Fellow in the Physics of Condensed Matter and Complex Systems Group (T-4). The award recognized Deffner for his paper published in Physical Review Letters.

The publication reports seminal work on the quantum thermodynamics of nanosystems. Deffner solved a difficult scientific problem by showing that the quantum Jarzynski equality generalizes to PT-symmetric quantum mechanics with unbroken PT symmetry. He illustrated these findings for an experimentally relevant system of two coupled optical waveguides. The publication also inspired experimental work, including a collaboration with the University of Maryland on microcavity quantum electrodynamics. Other experimental groups are now trying to observe the approach to broken PT symmetry predicted by Deffner. Avadh Saxena (T-4 Group Leader) nominated Deffner for the Prize.
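For reference, here is the standard form of the quantum Jarzynski equality. It concerns a system driven out of equilibrium from a thermal state at inverse temperature β. The equality relates the work W performed on that system to the equilibrium free-energy difference ΔF between the initial and final Hamiltonians. In the quantum case, W is conventionally defined by energy measurements at the start and end of the driving protocol.

The block also shows the second-law inequality that follows from Jensen’s inequality. The PT-symmetric generalization derived in the paper is not reproduced here.

```latex
\begin{aligned}
\left\langle e^{-\beta W} \right\rangle &= e^{-\beta \Delta F},
\qquad \beta = \frac{1}{k_B T}, \\
e^{-\beta \langle W \rangle} &\le \left\langle e^{-\beta W} \right\rangle = e^{-\beta \Delta F}
\quad\Longrightarrow\quad \langle W \rangle \ge \Delta F .
\end{aligned}
```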

Reference: “Jarzynski Equality in PT-Symmetric Quantum Mechanics,” Physical Review Letters 114, 150601 (2015); doi: 10.1103/PhysRevLett.114.150601. Authors: Sebastian Deffner and Avadh Saxena (T-4).

Deffner received a Ph.D. in Physics from the University of Augsburg. He worked as a Director’s Postdoc Fellow at the Lab from 2014 to 2016. Deffner is now an Assistant Professor of Physics at the University of Maryland – Baltimore County.

Dr. Leon Heller established the Postdoctoral Publication Prize in Theoretical Physics in 1976; the prize is endorsed by the Laboratory and administered by the Lab’s Postdoc Program Office. The winning publication describes work performed primarily during the tenure of the postdoctoral appointment. The Heller Prize consists of an award certificate and a monetary award, which Heller personally funds. The award presentation gives the winner the opportunity to present the published work at a Physics/Theoretical (P/T) Colloquium. Technical contact: Mary Anne With

Bioscience

Next-Gen Bioinformatics for the masses

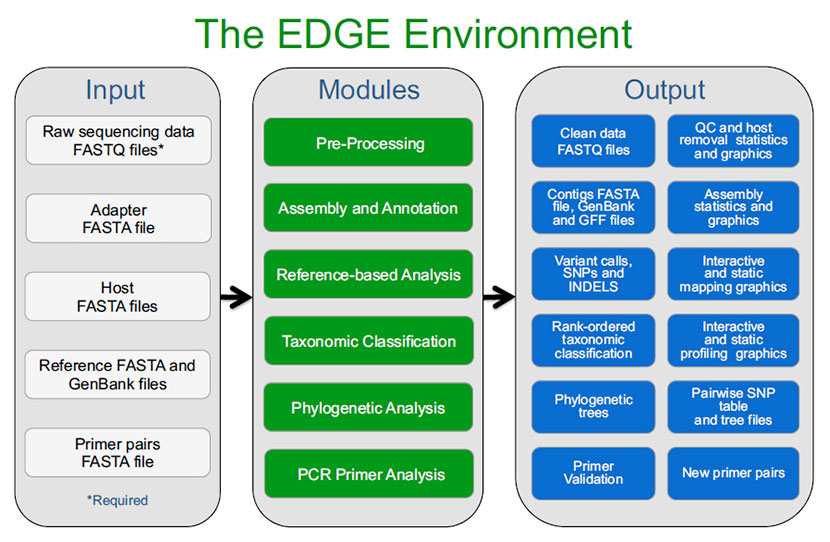

Many areas of modern biology rely on next generation sequencing to answer key questions. Examples include: 1) the effects and feedback of climate change on microbial communities in all types of environmental ecosystems, 2) how best to utilize plants or algae to solidify our national energy security, and 3) how to better help stop the spread of disease from both naturally occurring and manmade threats. The ability to characterize organisms through accurate and rapid sequencing of their genomes and compare them to known organisms is an important part of the Lab’s national security mission. Bioscience Division researchers and collaborators have developed a new bioinformatics platform called “Empowering the Development of Genomics Expertise” (EDGE), which makes genomic analysis fast, reliable, and accessible to more researchers than ever before. The journal Nucleic Acids Research published the report.

Over the last decade, next-generation sequencing instruments have become more widespread and accessible to the average biologist or physician. However, sophisticated bioinformatics tools are required to process and analyze the data. Public, open-source tools are available, but determining which tools to use when, and how, in order to answer specific questions about genomic data requires a significant level of expertise.

To mitigate this problem, Patrick Chain (Biosecurity and Public Health, B-10) and his colleagues developed EDGE, an open-source platform that integrates hundreds of publicly available tools that process Illumina sequence data. The key is that EDGE provides access to the tools in a very user-friendly web environment with ready-to-run pipelines to assemble, annotate, and compare genomes as well as to characterize complex samples from clinical or environmental settings. The pipelines perform many of the standard queries scientists ask of their samples or methods, such as: 1) quality control of the sequencing data, 2) assembly of the various sequence pieces to reconstitute the full genome of an organism, 3) determining the sequence differences between closely related organisms, 4) identifying which bacterial, archaeal, and viral organisms may be present within a complex clinical or environmental sample, 5) determining evolutionary relationships among a number of strains of the same species, and 6) examining or developing detection or diagnostic assays.

Figure 1. An overview of the EDGE Bioinformatics Environment. The only inputs required from the user are raw sequencing data and a project name. The user can create specific workflows with any combination of the modules and can adjust the parameters that control how each module functions. EDGE outputs a variety of files, tables and graphics, which can be viewed on screen or downloaded.

Because such analyses can be very complex and require the sequential use of many different bioinformatics tools, these preconfigured EDGE pipelines, or workflows, can reduce data analysis times from days or weeks to minutes or hours. Moreover, EDGE also provides graphics and interactive visualization of the results to improve comparisons and analysis. With the simple interface, a user with little to no bioinformatics experience can obtain useful results from very large sequencing data files. EDGE Bioinformatics has already helped streamline data analysis for groups in Thailand, Georgia, Peru, South Korea, Gabon, Uganda, Egypt and Cambodia, as well as several government laboratories in the United States.
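Quality control, the first of the standard pipeline steps listed earlier, usually begins by trimming low-quality bases from each read. The sketch below is a generic illustration of 3′-end quality trimming for a single read. It assumes Phred+33 quality encoding and uses arbitrary thresholds. It is not EDGE’s actual quality-control module.

```python
from typing import Optional

def phred_scores(quality: str, offset: int = 33) -> list[int]:
    """Decode a FASTQ quality string (Phred+33 encoding assumed)."""
    return [ord(ch) - offset for ch in quality]

def trim_3prime(seq: str, quality: str, min_q: int = 20,
                min_len: int = 50) -> Optional[tuple[str, str]]:
    """Trim low-quality bases from the 3' end of a read and discard reads
    that end up shorter than min_len. Thresholds are illustrative only."""
    scores = phred_scores(quality)
    end = len(scores)
    # Walk back from the 3' end until a base meets the quality threshold.
    while end > 0 and scores[end - 1] < min_q:
        end -= 1
    if end < min_len:
        return None  # read discarded
    return seq[:end], quality[:end]

read = "ACGT" * 15            # 60 bases
qual = "I" * 55 + "#" * 5     # 'I' = Q40, '#' = Q2 in Phred+33
print(trim_3prime(read, qual))  # keeps the first 55 bases
```

Real pipelines typically combine trimming with adapter removal, duplicate checks, and summary statistics. A platform like EDGE chains steps of this kind so that users do not have to run each one by hand.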

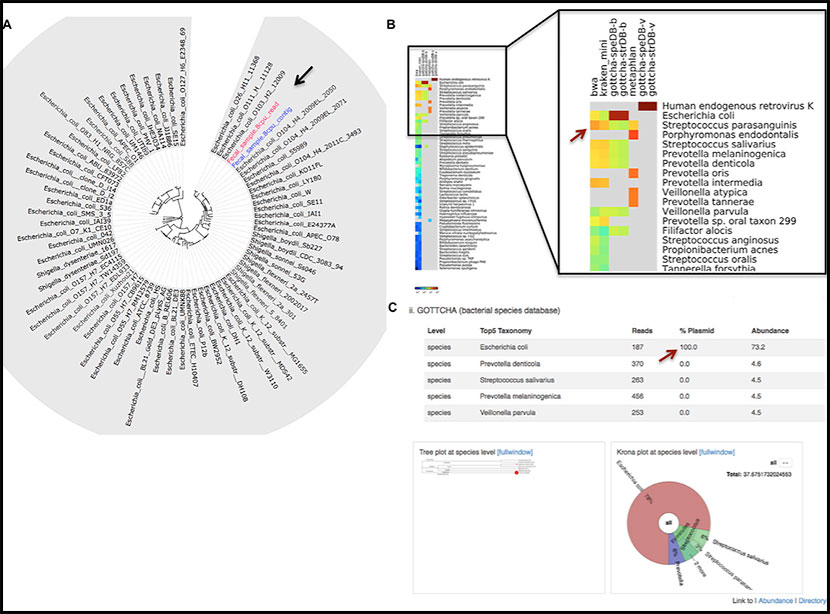

Figure 2. Phylogenetic and taxonomic analysis of human clinical samples with suspected and unknown causative agents. (A) A circular phylogenetic tree places both the raw reads and the contigs obtained from a clinical fecal sample within the E. coli O104 group. (B) A comparative heatmap of taxa identified in a nasal swab sample shows the abundance of typical nasal cavity organisms. (C) The E. coli identified with GOTTCHA in the nasal swab sample (in B) is described in greater detail in the tool-specific EDGE view (red arrow), which shows the percentage of hits to plasmids for each identified taxon; below are a taxonomic dendrogram of the detected taxa, with circles representing relative abundance, and a Krona plot of the same data.

Reference: “Enabling the Democratization of the Genomics Revolution with a Fully Integrated Web-based Bioinformatics Platform,” Nucleic Acids Research 45, 67 (2017); doi: 10.1093/nar/gkw1027. Authors: Po-E Li, Chien-Chi Lo, Karen W. Davenport, Yan Xu, Sanaa Ahmed, Shihai Feng, and Patrick S. G. Chain (B-10); Joseph J. Anderson (Defense Threat Reduction Agency and Naval Medical Research Center); Kimberly A. Bishop-Lilly (Naval Medical Research Center and Henry M. Jackson Foundation); and Vishwesh P. Mokashi (Naval Medical Research Center).

The Defense Threat Reduction Agency funded the research, which supports the Lab’s Global Security and Energy Security mission areas and the Science of Signatures science pillar through rapid genomic sequencing of organisms. Technical contact: Patrick Chain

Capability Enhancement

Prototype proves design concepts for plutonium electromagnetic isotope separator

Photo. Chris Leibman (Materials Synthesis and Integrated Devices, MPA-11) operates the electromagnetic separator.

Los Alamos researchers, in collaboration with external colleagues, have developed and tested a high-throughput electromagnetic isotope separator (EMIS) capable of producing gram-scale quantities of high-purity isotopes in a single day. The separator produced indium-113 with 99.9975% purity in a single pass, exceeding the researchers’ expectations for resolution and separation quality. The primary purpose of this EMIS is to serve as a prototype for ion source and instrument modifications. These modifications will mitigate risks associated with a similar instrument that will be used for isotopic refinement of the nation’s plutonium-242 (Pu-242) inventory.
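The separation principle behind an EMIS is the magnetic-sector relation. Singly charged ions accelerated through the same potential follow circular paths whose radius scales with the square root of their mass, so neighboring isotopes land at slightly different positions.

The sketch below makes three simplifying assumptions:
- an idealized 180-degree uniform-field sector;
- integer mass numbers in place of exact atomic masses;
- a hypothetical operating point of 30 kV and 0.4 T.

It says nothing about the actual instrument’s geometry or settings.

```python
import math

E_CHARGE = 1.602176634e-19      # elementary charge, C
AMU = 1.66053906660e-27         # atomic mass unit, kg

def orbit_radius(mass_number: float, accel_volts: float, field_tesla: float,
                 charge_state: int = 1) -> float:
    """Radius (m) of an ion's circular path in a uniform magnetic field,
    r = sqrt(2 m V / q) / B, approximating mass as mass_number * AMU."""
    m = mass_number * AMU
    q = charge_state * E_CHARGE
    return math.sqrt(2.0 * m * accel_volts / q) / field_tesla

def collector_separation(m1: float, m2: float, accel_volts: float,
                         field_tesla: float) -> float:
    """Spacing (m) of two isotope beams at the collector of an idealized
    180-degree sector, where each beam lands one diameter (2r) away."""
    return 2.0 * abs(orbit_radius(m2, accel_volts, field_tesla)
                     - orbit_radius(m1, accel_volts, field_tesla))

# Hypothetical operating point: 30 kV extraction, 0.4 T field.
V, B = 30e3, 0.4
for light, heavy in ((113, 115), (241, 242)):
    sep_mm = 1e3 * collector_separation(light, heavy, V, B)
    print(f"A={light} vs A={heavy}: r={orbit_radius(heavy, V, B):.3f} m, "
          f"separation = {sep_mm:.1f} mm")
```

Because Δr/r ≈ Δm/(2m), a one-mass-unit difference near A = 242 changes the radius by only about 0.2%. This is one reason resolution is so demanding for plutonium.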

Pu-242 is an isotope of plutonium that is chemically identical to, but about one-sixteenth as radioactive as, the fissile isotope Pu-239, which is used in nuclear weapons. Concerns surrounding nuclear criticality and nuclear weapons proliferation are significantly reduced with Pu-242, which makes it an ideal resource for accelerating plutonium science and engineering work at the Laboratory. It would enable the strategic use of lower hazard/security category radiological facilities and bring a broader suite of technical capabilities, personnel, and instrumentation to bear on plutonium-related problems.
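The factor of about sixteen stated above follows from specific activity. Specific activity is inversely proportional to both half-life and molar mass.

The sketch below uses approximate literature half-lives: about 24,110 years for Pu-239 and about 375,000 years for Pu-242. Mass numbers stand in for exact molar masses.

```python
import math

AVOGADRO = 6.02214076e23          # 1/mol
SECONDS_PER_YEAR = 3.15576e7      # Julian year

def specific_activity_bq_per_g(half_life_years: float, molar_mass_g: float) -> float:
    """A = ln(2) * N_A / (T_half * M), in decays per second per gram."""
    return math.log(2) * AVOGADRO / (half_life_years * SECONDS_PER_YEAR * molar_mass_g)

# Approximate half-lives; mass numbers stand in for molar masses.
pu239 = specific_activity_bq_per_g(24_110, 239)
pu242 = specific_activity_bq_per_g(375_000, 242)
print(f"Pu-239: {pu239:.3e} Bq/g, Pu-242: {pu242:.3e} Bq/g, "
      f"ratio = {pu239 / pu242:.1f}")
```

With these inputs the ratio comes out near 15.7, consistent with the factor of about sixteen.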

The existing Pu-242 inventory has an attractive isotopic purity (greater than 90%). However, it is contaminated with enough other highly radioactive isotopes (e.g., americium-241 and plutonium-238) that using it in its current form is problematic. Refining the inventory to a Pu-242 content of 99.9+% would allow researchers to use up to 600 g in existing radiological facilities. Given the performance and throughput of the prototype instrument, achieving the necessary purity levels is within reach. The team plans to complete the design of a plutonium separator within a year and to manufacture a dedicated machine about a year after that.
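Small impurity fractions can dominate a sample’s radioactivity because short-lived isotopes have specific activities many orders of magnitude higher than Pu-242. The sketch below shows how each nuclide’s share of the total activity is computed.

It uses approximate half-lives: 87.7 years for Pu-238, 432.2 years for Am-241, and 375,000 years for Pu-242. The composition is entirely hypothetical and is not the actual inventory.

```python
import math

AVOGADRO = 6.02214076e23
SECONDS_PER_YEAR = 3.15576e7

# Approximate half-lives (years); mass numbers stand in for molar masses.
HALF_LIFE_YEARS = {"Pu-242": 375_000, "Pu-238": 87.7, "Am-241": 432.2}
MASS_NUMBER = {"Pu-242": 242, "Pu-238": 238, "Am-241": 241}

def specific_activity(nuclide: str) -> float:
    """Decays per second per gram of the pure nuclide."""
    t_half_s = HALF_LIFE_YEARS[nuclide] * SECONDS_PER_YEAR
    return math.log(2) * AVOGADRO / (t_half_s * MASS_NUMBER[nuclide])

def activity_shares(mass_fractions: dict[str, float]) -> dict[str, float]:
    """Fraction of a mixture's total activity contributed by each nuclide."""
    per_gram = {n: f * specific_activity(n) for n, f in mass_fractions.items()}
    total = sum(per_gram.values())
    return {n: a / total for n, a in per_gram.items()}

# Hypothetical composition for illustration only (not the real inventory).
sample = {"Pu-242": 0.995, "Pu-238": 0.004, "Am-241": 0.001}
for nuclide, share in activity_shares(sample).items():
    print(f"{nuclide}: {100 * share:.1f}% of total activity")
```

With this made-up composition, 0.4% Pu-238 by mass accounts for roughly 90% of the activity. This illustrates why purification matters so much more than the nominal Pu-242 fraction alone suggests.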

Lab researchers include Chris Leibman, Jon Rau, and Kevin Dudeck (Materials Synthesis and Integrated Devices, MPA-11); Ilia Draganic and Larry Rybarcyk (Accelerators and Electrodynamics, AOT-AE); Tyler Bronson and James Jurney (Manufacturing Science and Engineering, MET-2). LANL staff in Mechanical Design Engineering (AOT-MDE: Brandon Roller, Mario Pacheco, Jason Burkhart, Manuel Soliz, David Ballard, Harry Salazar, and David Ireland), logistics and electrical safety (LOG-SUP, LOG-HERG, LOG-CS), and information protection (SAFE-IP) supported the execution of the work.

NNSA Readiness in Technical Base and Facilities (RTBF) enabled procurement of the separator, and the NNSA plutonium sustainment program helped advance the source design and testing. The work supports the Laboratory’s Nuclear Deterrence mission area and the Materials for the Future science pillar. Technical contacts: Chris Leibman and Jon Rau

Chemistry

Converting sugars into hydrocarbon fuels

Figure 3. The journal cover shows a concept of using biomass to derive fuel and fuel molecules through simple catalytic transformations. Cover image by Josh Smith, LANL.

A Los Alamos team is investigating methods to convert biomass into fuels. Unconventional sources of fossil hydrocarbons, such as heavy oil, shale gas, and oil sands, have helped offset the decline in conventional fossil fuel production. Because these are finite resources, sustainable and renewable alternatives to petroleum are needed to fill future gaps in the supply of transportation fuels and chemical feedstocks. Renewable, non-food-based biomass is considered one of the most promising alternatives for the production of fuels and commodity chemicals. The researchers are developing a mild route from simple sugars to branched alkanes suitable for diesel fuel. Chemistry & Sustainability (ChemSusChem) published the research and featured it on the journal cover.

Biomass conversion methods typically focus on converting cellulosic biomass (in particular its monosaccharide sugars, glucose and xylose) into fuels and chemical products. This is done through chain-extension steps followed by hydrodeoxygenation, which removes the oxygen atoms that are abundant in biomass. Drop-in, like-for-like replacements would permit easy integration with current infrastructure and would facilitate certification for use in engines and fuel pumps. Some residual oxygen can be beneficial, but the over-abundance of oxygen in biomass is detrimental to its use as a high-energy-density fuel (i.e., biomass is over-oxidized from a combustion standpoint). These classes of substrates also exhibit reactivity that is often difficult to predict and control. Reducing the oxygen content confers stability and increases energy density by converting carbon-oxygen (C−O) bonds to carbon-hydrogen (C−H) bonds, while minimizing the yield of CO2 as a by-product. In contrast to thermochemical routes, a catalytic approach using selective functional group transformations could provide lower-energy routes to hydrocarbons.
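To put the over-abundance of oxygen in numbers, the sketch below computes the oxygen mass fraction of three molecules:
- glucose;
- 5-HMF, the furan intermediate discussed in the next paragraph;
- decane, a representative oxygen-free C10 alkane.

Decane is chosen only for illustration and is not claimed to be the team’s product. Atomic masses are standard rounded values.

```python
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "O": 15.999}  # g/mol

def oxygen_mass_fraction(formula: dict[str, int]) -> float:
    """Mass fraction of oxygen in a molecule given its element counts."""
    total = sum(ATOMIC_MASS[el] * n for el, n in formula.items())
    return ATOMIC_MASS["O"] * formula.get("O", 0) / total

molecules = {
    "glucose (C6H12O6)": {"C": 6, "H": 12, "O": 6},
    "5-HMF (C6H6O3)": {"C": 6, "H": 6, "O": 3},
    "decane (C10H22)": {"C": 10, "H": 22},
}
for name, formula in molecules.items():
    print(f"{name}: {100 * oxygen_mass_fraction(formula):.1f}% oxygen by mass")
```

Glucose is just over half oxygen by mass, and 5-HMF is still more than a third. A drop-in alkane fuel contains none, which is why the hydrodeoxygenation step carries so much of the chemistry.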

The Lab team has succeeded in converting sugars into linear alkanes containing between 8 and 16 carbon atoms through selective aldol chain extension of 5-hydroxymethylfurfural (5-HMF) followed by ring-opening and hydrodeoxygenation. This process allowed them to isolate hydrocarbons in yields of up to 95%. Other groups have also used bio-derived furans, such as 5-HMF, to produce alkanes, and cellulose has been converted directly to pentane and hexane. The team has eliminated some processing steps and is moving toward “raw biomass”, rather than commodity chemicals produced from biomass, as the primary process input.

Reference: “The Conversion of Starch and Sugars into Branched C10 and C11 Hydrocarbons,” Chemistry & Sustainability (ChemSusChem) 9, 2298 (2016); doi: 10.1002/cssc.201600669. Researchers: Andrew D. Sutton and John C. Gordon (Inorganic, Isotope and Actinide Chemistry, C-IIAC), Jin K. Kim, Ruilian Wu, Caroline B. Hoyt, Louis A. Silks, III (Bioenergy and Biome Sciences, B-11); and David B. Kimball (Actinide Engineering and Science, MET-1).

The project began in the Laboratory Directed Research and Development (LDRD) program, and the DOE Office of Energy Efficiency & Renewable Energy (EERE) Bioenergy Technologies Office later funded the concept. The work supports the Lab’s Energy Security mission area and the Materials for the Future science pillar through the development of methods to convert renewable biomass to fuels. Technical contacts: Andrew Sutton and John Gordon

Earth and Environmental Sciences

LANL and industrial partners develop clean energy technologies

The first DOE-wide round of funding through the Technology Commercialization Fund (TCF) selected two projects involving Los Alamos researchers from the Earth and Environmental Sciences (EES) Division in collaboration with industrial partners. The TCF is administered by DOE’s Office of Technology Transitions (OTT), which works to expand the commercial impact of DOE’s portfolio of research, development, demonstration, and deployment activities. The funding aims to help businesses move promising clean energy technologies from DOE’s National Laboratories to the marketplace. In doing so, it seeks to increase the return on investment from federally funded research and to give more Americans access to cutting-edge energy technologies. Each selected project will receive an equal amount of non-federal funds to match the federal investment. The Los Alamos projects support the Lab’s Energy Security mission area by expanding the options for energy production while minimizing the impact on the environment. They are described in the following sections.

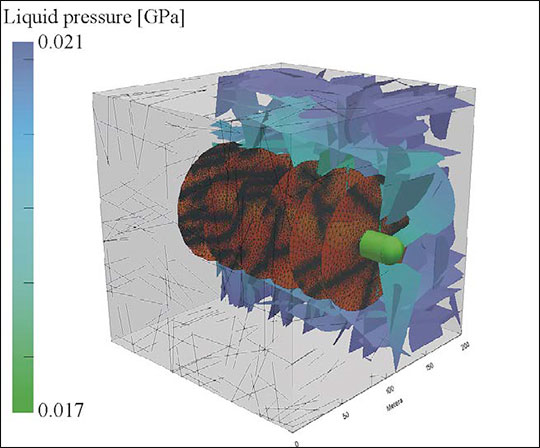

FracMan/dfnWorks: From Geological Fracture Characterization to Multiphase Subsurface Flow and Transport Simulation

Figure 4. A discrete fracture network based on a geological characterization of a shale formation generated using dfnWorks. A horizontal well (green cylinder) is included in the domain. Hydraulic fractures (brown discs) increase connectivity of the well with the natural fracture network. The background fractures are colored according to the steady-state pressure solution that is used to simulate transport.

Extensive geothermal energy resources remain untapped because of the inability to locate, predict, and sustain engineered fracture flows. Carbon dioxide (CO2) sequestration remains challenging because it depends on confirming that injected CO2 will stay in the subsurface rather than leak through fractures to the surface. High-level nuclear waste disposal requires quantifying long-term radionuclide transport pathways and retention in networks of natural fractures. All of these applications involve rock masses in which fractures are the primary conduits of flow and transport and in which there is significant geomechanical and hydrodynamic interaction with the rock matrix.

The researchers plan to demonstrate the feasibility of improving the commercialization of the discrete fracture network (DFN) approach. They will combine FracMan’s proven, geologically and geomechanically based, data-driven analyses with the next-generation computational power of LANL’s dfnWorks. Data sources will include fracture image logs, geological mapping and interpretation, 2-D, 3-D, and 4-D seismic processing, and hydrodynamic, geomechanical, geochemical, and tracer testing.
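A basic question in any DFN model is which natural fractures are linked to a well, either directly or through chains of other fractures (compare the hydraulic fractures and well in Figure 4). The sketch below is a deliberately simplified 2-D analogue and is not dfnWorks or FracMan:
- fractures are random line segments with hypothetical parameters;
- connectivity is found with pairwise intersection tests plus a union-find structure;
- no flow or transport is solved.

```python
import math
import random
from itertools import combinations

Point = tuple[float, float]
Segment = tuple[Point, Point]

def _orient(a: Point, b: Point, c: Point) -> float:
    """Signed area test: >0 if a->b->c turns left, <0 if right."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def intersects(s: Segment, t: Segment) -> bool:
    """Proper-crossing test for two segments (collinear touching ignored)."""
    (p1, p2), (q1, q2) = s, t
    d1, d2 = _orient(q1, q2, p1), _orient(q1, q2, p2)
    d3, d4 = _orient(p1, p2, q1), _orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0

def find(parent: list[int], i: int) -> int:
    """Union-find root lookup with path halving."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i

def random_fractures(n: int, max_len: float, seed: int = 0) -> list[Segment]:
    """Hypothetical 2-D fractures: random centers in a unit square,
    random orientations, lengths between 0.1 and 1.0 times max_len."""
    rng = random.Random(seed)
    segs = []
    for _ in range(n):
        cx, cy = rng.random(), rng.random()
        half = 0.5 * rng.uniform(0.1, 1.0) * max_len
        ang = rng.uniform(0.0, math.pi)
        dx, dy = half * math.cos(ang), half * math.sin(ang)
        segs.append(((cx - dx, cy - dy), (cx + dx, cy + dy)))
    return segs

def connected_to_well(fractures: list[Segment], well: Segment) -> set[int]:
    """Indices of fractures linked to the well through chains of
    intersecting fractures (the well is stored at index len(fractures))."""
    segs = fractures + [well]
    parent = list(range(len(segs)))
    for i, j in combinations(range(len(segs)), 2):
        if intersects(segs[i], segs[j]):
            parent[find(parent, i)] = find(parent, j)
    root = find(parent, len(fractures))
    return {i for i in range(len(fractures)) if find(parent, i) == root}

fracs = random_fractures(200, max_len=0.2, seed=42)
well = ((0.1, 0.5), (0.9, 0.5))   # hypothetical horizontal well
linked = connected_to_well(fracs, well)
print(f"{len(linked)} of {len(fracs)} fractures connect to the well")
```

Production DFN tools work with 3-D polygons, mesh the connected network, and then solve for pressure and particle transport on it. The connectivity step shown here is only the first part of that workflow.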

Technical contact: Nataliia Makedonska

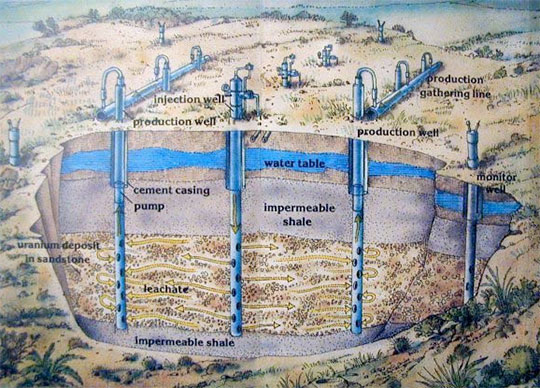

Efficient groundwater restoration at uranium in-situ recovery sites to enable domestic uranium production for nuclear energy

Uranium fuels nuclear reactors that generate nearly 20% of U.S. electricity without producing significant carbon emissions. About 90% of U.S. uranium production and over half of worldwide production now use the in-situ recovery (ISR) process. ISR is a leach mining method that is considered safer for workers and more environmentally friendly than conventional uranium mining because it involves no major ground disturbance and no exposure to uranium ore or mill tailings. It also generally costs less than conventional mining.

Figure 5. Cutaway depiction of a uranium in-situ recovery (ISR) operation. Credit: Uranium Producers Association

During ISR mining, water fortified with oxygen and carbon dioxide (or baking soda) is injected into the uranium ore zone to oxidize and solubilize uranium. The water is then pumped to the surface, where the uranium is recovered in ion-exchange columns. After mining, environmental regulations require that the water in the ore zone be restored to its pre-mining quality. The industry-standard method of groundwater restoration has two stages. First, the leaching solution is extracted from the ore zone by drawing in water from outside the zone. Then the same injection and production wells used in mining circulate ore zone water through reverse osmosis units that “polish” the water. This method effectively reduces the concentrations of dissolved solids in the water, including all contaminants. However, it does not restore the ore zone solids to their original state, in which uranium and other elements were sequestered in the solids.

As a result, the concentrations of uranium and other contaminants in the ore zone water tend to rebound over time. This rebound in concentration makes it difficult to demonstrate stability of restored ore zone groundwater quality. If stability cannot be demonstrated, current draft EPA regulations could require monitoring of groundwater around the ore zone for up to 30 years to show that water outside the aquifer exemption boundary granted for mining is not being impacted. Long-term monitoring or remedial actions could severely impact the economic viability of uranium ISR mining in the U.S.

The proposed method aims to restore both the groundwater and the ore zone aquifer solids to establish more stable long-term geochemical conditions. The process will use a unique combination of tracer chemicals to identify flow pathways between injection and production wells in the ore zone. This information will enable optimized introduction of remediants and manipulation of groundwater chemistry to increase the effectiveness of the introduced remediants. The phased introduction of both chemical remediants and biostimulants could achieve more effective restoration than either type of remediant alone. The method may also reduce water consumption during ISR restoration. Technical contact: Paul Reimus
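Tracer tests like the one described above are commonly interpreted through the moments of the breakthrough curve measured at a production well. The mean arrival time, for instance, indicates how quickly injected water reaches that well.

The sketch below computes the mean arrival time with the trapezoid rule. It is a generic technique, not the team’s proposed analysis. It assumes a short pulse injection under steady pumping and uses hypothetical concentration data.

```python
def trapezoid(y: list[float], x: list[float]) -> float:
    """Trapezoid-rule integral of y(x) for increasing x."""
    return sum(0.5 * (y[i] + y[i + 1]) * (x[i + 1] - x[i]) for i in range(len(x) - 1))

def mean_arrival_time(times: list[float], conc: list[float]) -> float:
    """First temporal moment of a tracer breakthrough curve,
    t_mean = integral(t * C dt) / integral(C dt), assuming a pulse
    injection and constant pumping."""
    zeroth = trapezoid(conc, times)
    first = trapezoid([t * c for t, c in zip(times, conc)], times)
    return first / zeroth

# Hypothetical breakthrough data (days, arbitrary concentration units).
times = [0.0, 1.0, 2.0, 3.0, 4.0, 6.0, 8.0, 12.0]
conc = [0.0, 0.2, 1.5, 2.0, 1.4, 0.6, 0.2, 0.0]
print(f"mean arrival time: {mean_arrival_time(times, conc):.2f} days")
```

Comparing mean arrival times across well pairs highlights fast pathways. Multiplying a mean arrival time by the pumping rate gives a rough estimate of the pore volume swept between the two wells.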

Materials Physics and Applications

Advances in metasurfaces

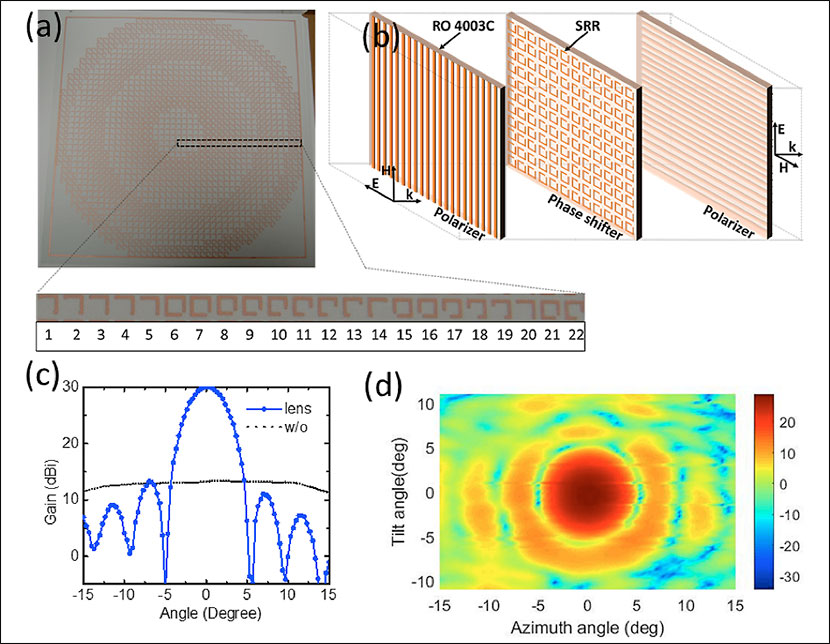

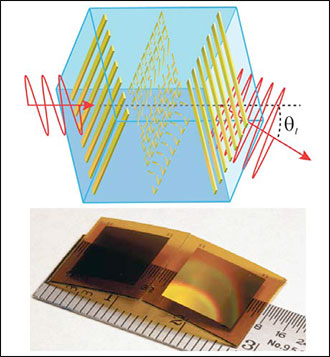

Metamaterials are artificially engineered media that have enabled the demonstration of many exotic electromagnetic phenomena, including negative refractive index, electromagnetic cloaking, and super-resolution lensing. Bulk metamaterials pose severe fabrication challenges, but planar metamaterial architectures (metasurfaces) offer alternative avenues to accomplish desirable functionalities. Metasurfaces consist of a single layer or a few layers of a planar array of subwavelength metal/dielectric resonators arranged in a particular spatial profile to manipulate electromagnetic waves. These structures allow independent control of the amplitude, phase, polarization, and propagation of electromagnetic waves by tailoring the design parameters of the constituent subwavelength resonators. Metasurfaces have demonstrated an unprecedented capability to manipulate electromagnetic waves, enabling unique phenomena including anomalous refraction/reflection, near-unity absorption, polarization conversion, flat lensing, and electromagnetic phase-shifting. Compared with bulk metamaterials, metasurfaces are much easier to fabricate, operate over a broader bandwidth and with lower losses, and are more compact and better suited to integration in many practical applications. Papers published in Scientific Reports and Reports on Progress in Physics describe recent advances.
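The anomalous refraction and reflection mentioned above follow from the generalized Snell’s law. In that law, a phase gradient imposed along the metasurface adds an in-plane momentum to the transmitted or reflected wave.

The sketch below evaluates the refraction form for an idealized linear phase gradient of 2π per supercell. The period and wavelength are hypothetical and unrelated to the devices in these papers.

```python
import math
from typing import Optional

def anomalous_refraction_angle(theta_i_deg: float, wavelength: float,
                               supercell_period: float,
                               n_i: float = 1.0, n_t: float = 1.0) -> Optional[float]:
    """Refraction angle (degrees) from the generalized Snell's law
    n_t sin(theta_t) - n_i sin(theta_i) = (lambda0 / 2pi) dPhi/dx,
    assuming a linear phase gradient of 2pi per supercell period.
    Returns None when no propagating refracted wave exists."""
    dphi_dx = 2.0 * math.pi / supercell_period
    s = (n_i * math.sin(math.radians(theta_i_deg))
         + wavelength * dphi_dx / (2.0 * math.pi)) / n_t
    if abs(s) > 1.0:
        return None  # the phase gradient is too steep: evanescent wave
    return math.degrees(math.asin(s))

# Hypothetical example: normal incidence, supercell period = 2 wavelengths.
print(anomalous_refraction_angle(0.0, wavelength=1.0, supercell_period=2.0))
```

Even at normal incidence the wave is steered away from the surface normal, here by about 30 degrees, which ordinary Snell’s law would not allow. The wavelength and period only need to share the same length unit.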

Metasurfaces transitioning to applications

1) Rationally designed metasurfaces would allow high absorption across the entire solar spectrum while emitting light only in a narrow spectral window matching the bandgap of photovoltaic materials. This property could be applied in an intermediate photon-management system to dramatically enhance the efficiency of solar thermophotovoltaics. The team has made progress by demonstrating an ultrathin metasurface whose unit cell consists of eight pairs of gold nanoresonators separated from a gold ground plane by a thin silicon dioxide spacer. The researchers showed that the metasurface functions as a polarization-independent, omnidirectional, and broadband solar absorber. It absorbs more than 90% of light in the visible and near-infrared wavelength range and has low absorptivity (emissivity) at mid- and far-infrared wavelengths. The relatively simple design of the absorber allows scale-up to large-area fabrication using conventional nanoimprint lithography.

2) The team developed a metasurface-based ultrathin flat lens antenna operating at microwave frequencies. The metasurface lens antenna is composed of three thin layers of metallic structures: an array of subwavelength resonators sandwiched between two orthogonal gratings, with 5-mm air spacing between layers. The structure provides a parabolic phase distribution in the radial direction so that it functions as a lens antenna. The antenna demonstrated excellent focusing/collimation of broadband microwaves from 7.0 to 10.0 GHz, with an absolute gain enhancement of 18 dBi (decibels relative to isotropic) at the central frequency of 9.0 GHz. The metasurface lens antenna could enable high-gain, broadband, lightweight, low-cost, and easily deployable flat transceivers for microwave communication. It might also overcome the severe limitations of conventional parabolic reflectors and dielectric lenses for beam collimation or focusing.

Figure 7. Design and characterization of the microwave metasurface flat lens. (a) Optical image of the fabricated metasurface layer that provides the required phase distribution for focusing the microwave beam. Inset shows the radial metallic resonators from center to edge. (b) Schematic of all three layers of the lens. (c) Measured far-field gain of the flat lens antenna when fed by a horn antenna (blue) and the gain of a horn antenna without the lens (black). (d) Measured beam profile at the focus shows a dominant main lobe accompanied by side lobes.
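The parabolic radial phase distribution of the microwave lens in item 2) above is the small-radius approximation of an exact profile. That exact profile is the phase a flat lens must compensate so that waves leaving every radius arrive at the focus in phase.

The sketch below computes both the exact profile and its parabolic (paraxial) approximation at 9.0 GHz. The 0.15 m focal length and the sample radii are hypothetical, not the dimensions of the fabricated antenna. The sign with which the phase is applied depends on the phase convention.

```python
import math

C = 2.99792458e8  # speed of light, m/s

def lens_phase(r_m: float, focal_m: float, freq_hz: float,
               paraxial: bool = False) -> float:
    """Phase (radians, wrapped to [0, 2pi)) a flat lens must compensate at
    radius r so that a normally incident plane wave focuses at focal_m.
    Exact form: 2pi (sqrt(r^2 + f^2) - f) / lambda, the extra path to the
    focus. Paraxial form: the parabolic approximation pi r^2 / (lambda f)."""
    wavelength = C / freq_hz
    if paraxial:
        phi = math.pi * r_m**2 / (wavelength * focal_m)
    else:
        phi = 2.0 * math.pi * (math.hypot(r_m, focal_m) - focal_m) / wavelength
    return phi % (2.0 * math.pi)

for r in (0.0, 0.02, 0.05, 0.1):
    exact = lens_phase(r, 0.15, 9.0e9)
    para = lens_phase(r, 0.15, 9.0e9, paraxial=True)
    print(f"r = {r:.2f} m: exact {exact:.2f} rad, parabolic {para:.2f} rad")
```

The two profiles agree near the axis and drift apart toward the lens edge. This is where a real design must choose which profile the resonator array encodes.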

Reference: “Metasurface Broadband Solar Absorber,” Scientific Reports 6, 20347 (2016); doi: 10.1038/srep20347. Authors: Abul Azad, Anatoly Efimov, and Hou-Tong Chen (Center for Integrated Nanotechnologies, MPA-CINT); Diego Dalvit and Wilton Kort-Kamp (Physics of Condensed Matter and Complex Systems, T-4); Milan Sykora (Inorganic Isotope and Actinide Chemistry, C-IIAC); Antoinette J. Taylor (Associate Directorate for Chemistry, Life, and Earth Sciences, ADCLES); Nina Weisse-Bernstein (Space and Remote Sensing, ISR-2); and Ting S. Luk (Sandia National Laboratories – CINT).

The research benefited from the fabrication and characterization capabilities in the Center for Integrated Nanotechnologies (CINT), a DOE Office of Science national user facility. The Laboratory Directed Research and Development (LDRD) program funded the work, which supports the Lab’s Energy Security mission area and the Emergent Phenomena theme in the Materials for the Future science pillar. The innovations of this research address key science issues encountered in the development of future photonic technologies, energy harvesting, and lightweight communication devices. Technical contact: Abul Azad

Researchers survey the current state of metasurfaces

Figure 8. (Top): Schematic of a tri-layer terahertz metasurface structure (not drawn to scale) consisting of two orthogonal gold gratings and an array of anisotropic subwavelength gold resonators of various geometries, which were embedded within a polyimide film. (Bottom): Photograph of the fabricated metasurface devices that were used to enable highly efficient linear polarization conversion over a broadband frequency range, and to control the scattering phase for arbitrary wave front engineering such as a flat lens for beam focusing.

Hou-Tong Chen (MPA-CINT) and his collaborators are designing and fabricating functional metamaterials to transform the technological world, with applications in integrated optics, renewable energy, sensing, imaging, and communications. They pioneered the development of actively switchable and frequency-tunable terahertz metasurfaces. The researchers have made significant contributions to developing and understanding few-layer metasurfaces for perfect absorption, antireflection, and polarization conversion. Recent advances include the successful demonstration of highly efficient metasurface flat lenses operating at microwave and terahertz frequencies, and ultra-broadband metasurface solar absorbers for high-performance solar thermophotovoltaic applications.

Reference: “A Review of Metasurfaces: Physics and Applications,” Reports on Progress in Physics 79, 076401 (2016); doi: 10.1088/0034-4885/79/7/076401. Authors: Hou-Tong Chen (MPA-CINT), Antoinette J. Taylor (ADCLES), and Nanfang Yu (Columbia University). The Laboratory Directed Research and Development (LDRD) program funded Chen. His work was performed in part at the Center for Integrated Nanotechnologies (CINT), a U.S. DOE Office of Basic Energy Sciences Nanoscale Science Research Center operated jointly by Los Alamos and Sandia National Laboratories. The work supports the Laboratory’s Energy Security mission area and the Materials for the Future science pillar. Technical contact: Hou-Tong Chen

Materials Science and Technology

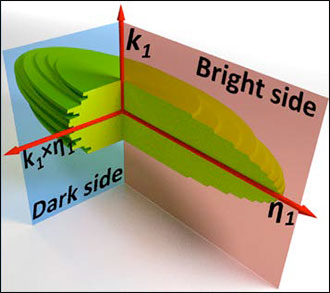

First characterization of the lateral atomic structure of deformation twins in magnesium

Hexagonal close-packed (hcp) materials are widely used in nuclear, aeronautic, automotive, and defense technologies. A Los Alamos-led team has combined electron microscopy and atomistic simulations to shed light on the previously uncharted, and crystallographically unobservable, lateral atomic structure of deformation twins in hcp metals and the mechanisms behind it. Twinning is a major plastic deformation mode in hcp metals at room temperature and influences their ductility and formability. Improving plastic forming and mechanical properties therefore requires a basic understanding of twinning mechanisms. This improved understanding should help researchers develop better alloys, processing routes, and material models for designing materials with the desired properties and microstructures. The journal Nature Communications published the research.

Twin propagation involves 3-D motion of twin interfaces in three directions relative to the twinning shear direction: normal, forward, and lateral. Until now, the materials community considered only forward and normal propagation and disregarded lateral twin expansion. The atomic structure and motion mechanisms of the boundary lateral to the twinning shear direction had never been characterized at the atomic level, because such a boundary is, in principle, crystallographically unobservable. For this reason, it is called the “dark side” of the twin.

Figure 9. Schematic ellipsoidal morphology of a three-dimensional twin. η1 is the twinning shear direction; k1 is the normal to the twinning plane. The bright side corresponds to forward and normal propagation; the dark side corresponds to lateral propagation. On the bright side, the twin propagates through the glide of twinning dislocations along the twinning direction. On the dark side, the twin propagates through the migration of semi-coherent interfaces, where the pinning effect of misfit dislocations results in an irregular twin shape.
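For context on the twinning shear direction η1, the magnitude of the shear a twin carries depends only on the hcp axial ratio c/a. The sketch below assumes the {10-12} twin mode, the mode most commonly studied in magnesium, and uses the standard expression s = (γ^2 - 3)/(√3·γ) with γ = c/a. The c/a values are approximate room-temperature handbook figures, and the function name is illustrative.

```python
import math


def twinning_shear_10m12(c_over_a: float) -> float:
    """Twinning shear s for the {10-12} twin mode of an hcp crystal,
    s = (gamma**2 - 3) / (sqrt(3) * gamma) with gamma = c/a.
    The sign flips at gamma = sqrt(3)."""
    g = c_over_a
    return (g * g - 3.0) / (math.sqrt(3.0) * g)


if __name__ == "__main__":
    # Approximate room-temperature axial ratios.
    for name, ca in (("Mg", 1.624), ("Ti", 1.587), ("Zn", 1.856)):
        print(f"{name}: c/a = {ca}, s = {twinning_shear_10m12(ca):+.3f}")
```

For Mg this gives |s| of about 0.13. The shear changes sign at c/a = √3, which is why the same twin mode extends the c axis in Mg and Ti but contracts it in Zn.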

The team used the capabilities of LANL’s Electron Microscopy Laboratory and expertise in molecular dynamics simulations to determine that the lateral atomic structure of deformation twins in magnesium is a serrated boundary composed of coherent twin boundaries and semi-coherent twist prism boundaries that control twin growth. The work characterizes a novel aspect of twinning, namely the configuration and mobility of the dark side and the role it plays in overall twin propagation. This information could lead to a better understanding of the mechanism of twinning and its contribution to deformation. The results should apply to the same twin mode in other hexagonal close-packed materials, and the conceptual ideas should be relevant to all twin modes in crystalline materials.

Reference: “Characterizing the Boundary Lateral to the Shear Direction of Deformation Twins in Magnesium,” Nature Communications 7, 11577 (2016); doi: 10.1038/ncomms11577. Authors: Yue Liu, Shuai Shao, R.J. McCabe, and Carlos N. Tomé (Materials Science in Radiation and Dynamics Extremes, MST-8); Mingyu Gong and Jian Wang (University of Nebraska – Lincoln); Nan Li (Center for Integrated Nanotechnologies, MPA-CINT); and Y. Jiang (University of Nevada – Reno).

The DOE Office of Science, Office of Basic Energy Sciences, Materials Sciences and Engineering Division funded the research, which was performed in part at the Center for Integrated Nanotechnologies (CINT), a DOE Office of Science User Facility. The work supports the Lab’s Energy Security mission area and Materials for the Future science pillar through the development of additive manufacturing to produce materials of controlled functionality. Technical contacts: Carlos N. Tomé and Yue Liu

Physics

Studying nucleosynthesis using high-powered lasers

Photo. Internal view of the University of Rochester OMEGA Laser's target chamber during a target shot. Photo credit: University of Rochester

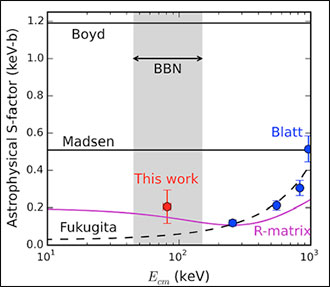

For the first time, researchers have used laser-generated plasmas to study a nuclear reaction relevant to Big-Bang nucleosynthesis (the formation of new atomic nuclei by nuclear reactions, thought to occur in the interiors of stars and in the early stages of the universe’s development). Astrophysicists have observed anomalously high levels of lithium-6 (6Li) in primordial material, but the source of this 6Li is unknown. One candidate has been the tritium plus helium-3 (T+3He) fusion reaction, which could potentially have produced excess 6Li during the Big Bang. Until now, the cross section for T+3He production of 6Li, which sets the reaction rate, was not known well enough to constrain models of primordial nucleosynthesis. By using a high-powered laser to create a hot, inertially confined plasma, investigators are now able to study this reaction. The journal Physical Review Letters published their findings.

Figure 10. The astrophysical S-factor for the T+3He fusion reaction, showing data from this work (red), which is the first in the Big-Bang nucleosynthesis (BBN) energy range. (The S-factor is a rescaled variant of the total cross section that accounts for the Coulomb repulsion between charged reactants.) The data are consistent with older accelerator data (blue) and new nuclear theory (magenta), but show a discrepancy with values used in astrophysical models (black).
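The S-factor in Figure 10 removes the steep Coulomb-barrier energy dependence of a charged-particle cross section, σ(E) = S(E)/E · exp(-2πη), so that data taken at different energies are easier to compare. The sketch below implements this standard conversion using the common numerical form 2πη ≈ 31.29·Z1·Z2·√(μ/E), with the reduced mass μ in atomic mass units and the center-of-mass energy E in keV. The function names are illustrative, and the constant S-factor in the example is a placeholder, not a measured value.

```python
import math

M_T = 3.01604928     # tritium atomic mass (u)
M_HE3 = 3.01602932   # helium-3 atomic mass (u)


def reduced_mass_amu(m1: float, m2: float) -> float:
    """Reduced mass of the two reactants, in atomic mass units."""
    return m1 * m2 / (m1 + m2)


def penetration_factor(e_kev: float, z1: int, z2: int, mu_amu: float) -> float:
    """exp(-2*pi*eta), with 2*pi*eta = 31.29 * Z1 * Z2 * sqrt(mu / E),
    mu in u and E (center of mass) in keV."""
    return math.exp(-31.29 * z1 * z2 * math.sqrt(mu_amu / e_kev))


def s_factor(sigma_barn: float, e_kev: float, z1: int, z2: int, mu_amu: float) -> float:
    """S(E) = sigma(E) * E * exp(2*pi*eta), in keV*barn."""
    return sigma_barn * e_kev / penetration_factor(e_kev, z1, z2, mu_amu)


def cross_section(s_kev_barn: float, e_kev: float, z1: int, z2: int, mu_amu: float) -> float:
    """Inverse relation: sigma(E) = S(E) / E * exp(-2*pi*eta), in barn."""
    return s_kev_barn / e_kev * penetration_factor(e_kev, z1, z2, mu_amu)


if __name__ == "__main__":
    mu = reduced_mass_amu(M_T, M_HE3)   # ~1.508 u for T + 3He (Z1 = 1, Z2 = 2)
    s_placeholder = 1.0                 # hypothetical constant S-factor (keV*barn)
    for e in (10.0, 50.0, 100.0, 200.0):
        sigma = cross_section(s_placeholder, e, 1, 2, mu)
        print(f"E = {e:5.1f} keV: sigma = {sigma:.3e} b for a constant S")
```

Even with S held constant, the cross section in this example changes by orders of magnitude between 10 and 200 keV, which is why plotting S rather than σ makes a measurement in the nucleosynthesis energy range directly comparable with accelerator data and theory.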

This work is a fundamentally new way to study nuclear physics using high-energy-density, inertially confined plasmas. Alex Zylstra and Hans Herrmann (Plasma Physics, P-24) led a team that used the University of Rochester’s OMEGA laser to illuminate the outer surface of a glass microballoon containing T3He (tritium and helium-3) fuel, driving a strong shock that heated and compressed the fuel and generated thermonuclear fusion reactions. The data indicate that the T+3He reaction rate does not explain the observed 6Li abundances, a result that may rule out this reaction as the explanation for the anomaly. The data also reveal that current astrophysical models use inaccurate reaction rates, which can now be updated for improved accuracy. The team found good agreement with new R-matrix nuclear calculations. (The R-matrix is a computational quantum mechanical method for studying resonances in nuclear reactions.)

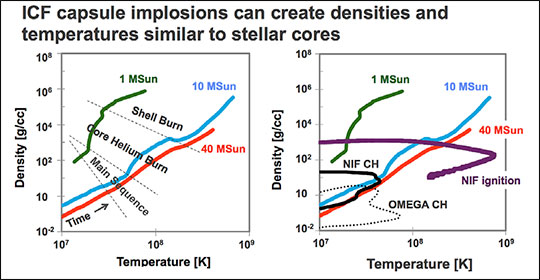

Stellar evolution models rely heavily on measured nuclear reaction cross sections to determine the rates at which elements are produced and destroyed throughout a star’s life. Although most nuclear reactions relevant to astrophysics have been studied in accelerator beam experiments, this work used a plasma at conditions comparable to those of plasmas found in the universe. Because plasmas are a fundamentally different environment for nuclear reactions than accelerator beams, this is a valuable new way to study nucleosynthesis. Researchers could use this technique to study many other reactions that produced elements heavier than hydrogen during the Big Bang or in stellar interiors.
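The step from a cross section to the reaction rate used in stellar and Big-Bang models is the Maxwellian-averaged reactivity ⟨σv⟩ of a thermal plasma. The sketch below evaluates it numerically at temperature kT, assuming (purely for illustration) a constant S-factor, whereas real S-factors vary with energy. It also computes the Gamow peak energy E0 = (b·kT/2)^(2/3), where the Maxwell-Boltzmann tail and the Coulomb barrier-penetration factor combine to give the most effective reaction energies, and the rate per unit volume n1·n2·⟨σv⟩. All function names are illustrative.

```python
import math

KEV_J = 1.602176634e-16      # joules per keV
AMU_KG = 1.66053906660e-27   # kilograms per atomic mass unit
BARN_M2 = 1.0e-28            # square meters per barn


def sommerfeld_b(z1: int, z2: int, mu_amu: float) -> float:
    """b in 2*pi*eta = b / sqrt(E) with E in keV (b has units of keV^0.5)."""
    return 31.29 * z1 * z2 * math.sqrt(mu_amu)


def gamow_peak_kev(kt_kev: float, z1: int, z2: int, mu_amu: float) -> float:
    """Energy that maximizes exp(-E/kT - b/sqrt(E)): E0 = (b * kT / 2)**(2/3)."""
    return (sommerfeld_b(z1, z2, mu_amu) * kt_kev / 2.0) ** (2.0 / 3.0)


def maxwellian_reactivity(kt_kev, s_kev_barn, z1, z2, mu_amu, n=20000):
    """<sigma v> in m^3/s for a constant S-factor (an illustrative assumption):
    <sigma v> = sqrt(8/(pi mu)) * (kT)^(-3/2) * integral S exp(-E/kT - b/sqrt(E)) dE.
    """
    b = sommerfeld_b(z1, z2, mu_amu)
    lo, hi = 1.0e-4, 200.0 * kt_kev        # keV; the integrand is negligible outside
    ratio = hi / lo
    grid = [lo * ratio ** (i / n) for i in range(n + 1)]   # log-spaced energies
    f = [s_kev_barn * math.exp(-e / kt_kev - b / math.sqrt(e)) for e in grid]
    integral = sum(0.5 * (f[i] + f[i + 1]) * (grid[i + 1] - grid[i]) for i in range(n))
    mu_kg = mu_amu * AMU_KG
    # keV^0.5 * barn * kg^-0.5 -> m^3/s after converting keV -> J and barn -> m^2.
    return (math.sqrt(8.0 / (math.pi * mu_kg)) * kt_kev ** -1.5 * integral
            * math.sqrt(KEV_J) * BARN_M2)


def rate_density(n1: float, n2: float, sigma_v: float, identical: bool = False) -> float:
    """Reactions per m^3 per s; halved for identical reactants to avoid double counting."""
    return n1 * n2 * sigma_v / (2.0 if identical else 1.0)


if __name__ == "__main__":
    mu = 3.01604928 * 3.01602932 / (3.01604928 + 3.01602932)   # T + 3He reduced mass (u)
    for kt in (5.0, 10.0, 20.0):
        e0 = gamow_peak_kev(kt, 1, 2, mu)
        sv = maxwellian_reactivity(kt, 1.0, 1, 2, mu)   # placeholder S = 1 keV*b
        print(f"kT = {kt:4.1f} keV: Gamow peak ~ {e0:5.1f} keV, <sigma v> ~ {sv:.2e} m^3/s")
```

Because the integrand is concentrated near the Gamow peak, a thermal plasma samples the cross section over a window of energies set by its temperature rather than at a single beam energy, one way in which plasma measurements differ from accelerator experiments.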

Reference: “Using Inertial Fusion Implosions to Measure the T + 3He Fusion Cross Section at Nucleosynthesis-Relevant Energies,” Physical Review Letters 117, 035002 (2016); doi: 10.1103/PhysRevLett.117.035002. Authors: A. B. Zylstra (P-24 and Massachusetts Institute of Technology), H. W. Herrmann and Y. H. Kim (P-24), M. Gatu Johnson, J. A. Frenje, C. K. Li, H. Sio, F. H. Séguin, and R. D. Petrasso (Massachusetts Institute of Technology); G. Hale and M. Paris (Nuclear and Particle Physics, Astrophysics and Cosmology, T-2); M. Rubery (Atomic Weapons Establishment, AWE); A. Bacher (Indiana University); C. R. Brune (Ohio University); C. Forrest, V. Yu. Glebov, R. Janezic, T. C. Sangster, W. Seka, and C. Stoeckl (University of Rochester); D. McNabb and J. Pino (Lawrence Livermore National Laboratory); and A. Nikroo (General Atomics).

Figure 11. Comparison of densities and temperatures of stellar cores and ICF (inertial confinement fusion) conditions at the National Ignition Facility (NIF) and the OMEGA laser. M denotes solar mass units, and CH is a hydrocarbon shell. Stellar evolution simulations by David Dearborn (Lawrence Livermore National Laboratory), NIF simulations by Harry Robey and Robert Tipton (Lawrence Livermore National Laboratory), and OMEGA simulation by P. B. Radha (University of Rochester).

NNSA Campaign 10 funded the development of the gamma-ray diagnostics that are primarily used for programmatic work and also enabled this experiment. The Laboratory Directed Research and Development (LDRD) program funded this specific experiment. The work supports the science underpinning the Lab’s Nuclear Deterrence mission area and the Nuclear and Particle Futures science pillar. Technical contact: Alex Zylstra

Sigma

Preparation of a fully dense copper/tungsten composite for mesoscale material science

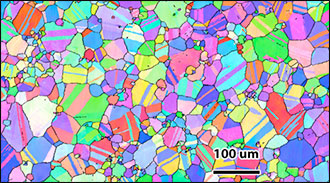

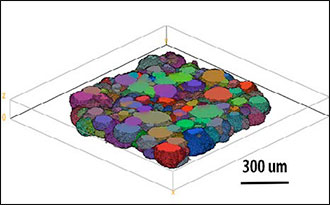

Polycrystalline materials exhibit heterogeneous behavior when subjected to external loading, and the initial microstructure contributes significantly to this heterogeneous response. Developing a predictive model that can capture this heterogeneity requires carefully designed and measured experimental data. To that end, researchers produced a fully dense copper/tungsten (Cu/W) composite using a powder metallurgy technique, working at the Sigma Complex and the Electron Microscopy Laboratory in conjunction with the Cornell High Energy Synchrotron Source. The Journal of Materials Science published their research.

Understanding the failure mechanisms of structural materials is critical to developing more reliable components, but doing so usually relies on post-failure analysis, which reveals only the final accumulation of void or crack initiation, growth, and coalescence. This standard post-mortem analysis is insufficient for learning how to control damage processes at the mesoscale, the scale between atomic and macroscopic that strongly influences materials’ macroscopic behavior and properties. 2-D observations have limited applicability because void or crack nucleation is unlikely to occur on the observed plane. High-energy diffraction microscopy (HEDM) enables 3-D characterization of polycrystalline structural materials, providing tomography, crystal orientation fields, and local stress mapping. The method allows researchers to observe void and crack initiation, growth, and coalescence nondestructively at the mesoscale.
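HEDM relies on Bragg diffraction of high-energy X-rays from individual grains. The sketch below computes first-order Bragg angles for a few reflections of fcc Cu and bcc W, applying the standard structure-factor selection rules for those two lattices. The 50 keV beam energy is a hypothetical choice, the lattice parameters (3.615 Å for Cu, 3.165 Å for W) are approximate room-temperature handbook values, and the function names are illustrative.

```python
import math

HC_KEV_ANGSTROM = 12.39842  # h*c in keV*Angstrom


def xray_wavelength_angstrom(energy_kev: float) -> float:
    """Photon wavelength for a given energy."""
    return HC_KEV_ANGSTROM / energy_kev


def cubic_d_spacing(a_angstrom: float, h: int, k: int, l: int) -> float:
    """Interplanar spacing of the (hkl) planes in a cubic lattice."""
    return a_angstrom / math.sqrt(h * h + k * k + l * l)


def allowed(lattice: str, h: int, k: int, l: int) -> bool:
    """Selection rules: fcc needs h, k, l all even or all odd; bcc needs h+k+l even."""
    if lattice == "fcc":
        return len({h % 2, k % 2, l % 2}) == 1
    if lattice == "bcc":
        return (h + k + l) % 2 == 0
    raise ValueError(f"unsupported lattice: {lattice}")


def two_theta_deg(energy_kev: float, d_angstrom: float) -> float:
    """Scattering angle 2*theta from first-order Bragg's law, lambda = 2 d sin(theta)."""
    s = xray_wavelength_angstrom(energy_kev) / (2.0 * d_angstrom)
    return 2.0 * math.degrees(math.asin(s))


if __name__ == "__main__":
    energy = 50.0  # hypothetical beam energy (keV)
    phases = {"Cu": ("fcc", 3.615), "W": ("bcc", 3.165)}
    for name, (lattice, a) in phases.items():
        for hkl in ((1, 0, 0), (1, 1, 0), (1, 1, 1), (2, 0, 0)):
            if allowed(lattice, *hkl):
                d = cubic_d_spacing(a, *hkl)
                print(f"{name} {hkl}: d = {d:.4f} A, 2theta = {two_theta_deg(energy, d):.2f} deg")
```

At energies like this the wavelength is a fraction of an ångström, so the diffraction angles are only a few degrees and the diffracted beams travel nearly forward, the transmission geometry in which HEDM records diffraction from many grains in a bulk sample.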

The team screened argon-atomized copper powder to remove the fraction of extremely large particles and used the remaining material as raw powder. The investigators milled and screened the tungsten powder to collect the tungsten particles. Hot pressing the screened Cu/W powder at 900°C in hydrogen gas (H2) removed most of the surface oxygen. The Cu/W composite hot pressed at 950°C in pure H2 reached about 94% of theoretical density. Additional hot isostatic pressing at 1050°C in argon gas produced a fully dense structure with the designed Cu grain size and W particle distribution.
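The 94% figure above is a relative density: the measured density divided by the theoretical (pore-free) density of the mixture. A minimal sketch, assuming a simple rule of mixtures with handbook densities of 8.96 g/cm³ for Cu and 19.25 g/cm³ for W; the 25 vol% W used in the example is hypothetical, since the composite's composition is not given here, and the function names are illustrative.

```python
RHO_CU = 8.96    # g/cm^3, handbook density of copper
RHO_W = 19.25    # g/cm^3, handbook density of tungsten


def density_from_volume_fraction(vf_w: float) -> float:
    """Rule of mixtures for a pore-free Cu/W composite with W volume fraction vf_w."""
    return vf_w * RHO_W + (1.0 - vf_w) * RHO_CU


def density_from_weight_fraction(wf_w: float) -> float:
    """Same quantity when the composition is given as a W weight fraction wf_w."""
    return 1.0 / (wf_w / RHO_W + (1.0 - wf_w) / RHO_CU)


def volume_to_weight_fraction(vf_w: float) -> float:
    """Convert a W volume fraction to the corresponding W weight fraction."""
    return vf_w * RHO_W / density_from_volume_fraction(vf_w)


def relative_density(measured: float, theoretical: float) -> float:
    """Fraction of theoretical density; porosity is 1 minus this value."""
    return measured / theoretical


if __name__ == "__main__":
    vf = 0.25                                  # hypothetical W volume fraction
    rho_th = density_from_volume_fraction(vf)
    measured = 0.94 * rho_th                   # a compact at ~94% of theoretical density
    rel = relative_density(measured, rho_th)
    print(f"theoretical density ~ {rho_th:.2f} g/cm^3 "
          f"({volume_to_weight_fraction(vf):.0%} W by weight)")
    print(f"relative density = {rel:.0%}, porosity ~ {1 - rel:.0%}")
```

Because W is more than twice as dense as Cu, a modest W volume fraction corresponds to a much larger weight fraction, so it matters whether a composition is quoted by volume or by weight when computing the theoretical density.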

Figure 12. (Top): Electron backscatter diffraction (EBSD) micrograph showing the uniform grain size distribution, compared with cast and rolled materials. (Bottom): HEDM micrograph showing the 3-D tomographic grain distribution of the Cu/W composite.

Reference: “Processing and Consolidation of Copper/Tungsten,” Journal of Materials Science 52, 1172 (2017); doi: 10.1007/s10853-016-0413-7. Authors: Ching-Fong Chen, Michael J. Brand, Eric L. Tegtmeier, and David E. Dombrowski (Sigma Division, Sigma-DO); Reeju Pokharel, Bjorn Clausen, and Ricardo A. Lebensohn (Materials Science in Radiation and Dynamics Extremes, MST-8); and T. L. Ickes (Applied Engineering Technology, AET-6).

The Laboratory Directed Research and Development (LDRD) program funded the work, which supports the Laboratory’s Energy Security mission area and Materials for the Future science pillar. The predictive capabilities of such mesoscale research and modeling could be useful for a wide variety of materials research. The Lab’s proposed MaRIE (Matter-Radiation Interactions in Extremes) experimental facility could carry this research into the next generation, enabling the design of the advanced materials needed to meet modern national security and energy security challenges. Technical contact: Chris Chen

Theoretical

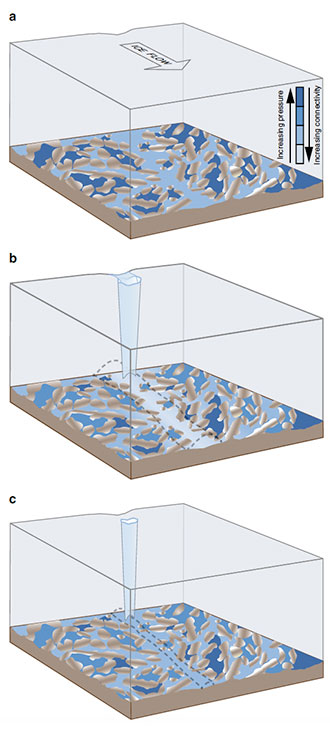

Modeling the dynamics of the Greenland Ice Sheet

Surface meltwater that drains to the bed of the Greenland Ice Sheet each summer causes variations in ice flow that cannot be fully explained by prevailing theories. A research team led by Matthew Hoffman and Stephen Price (Fluid Dynamics and Solid Mechanics, T-3) has found that changes in extensive, sediment-choked subglacial “swamps” explain why the ice sheet’s movement slows in late summer and winter. The team’s new model extends the long-recognized complexity of terrestrial hydrology to the historically simplified understanding of hydrology beneath glaciers, expanding the conceptual model of subglacial hydrology. Nature Communications published their findings.

Figure 13. Conceptual model of a three-component subglacial hydrologic system for the Greenland Ice Sheet. (a) At the onset of the melt season, a large fraction of the bed is composed of weakly connected cavities at a higher water pressure than the surrounding distributed system. (b) In the middle of the melt season, meltwater draining from the surface through moulins is largely accommodated by the formation of efficient channels (dashed grey outline). Concurrently, some of the weakly connected cavities have leaked water and lost pressure, because their connectivity with the rest of the system increased during periods of pressurization. (c) At the end of the melt season, channels collapse within days after melt inputs cease. The partially drained weakly connected cavities take months to recharge by basal melting, leaving higher integrated basal traction than before summer began.

Surface meltwater draining to the bed of the Greenland Ice Sheet each summer causes the ice sheet’s flow toward the ocean to double in speed or more, until subsequent increases in the efficiency of the subglacial drainage system accommodate the water over a period of months, eventually slowing the ice sheet in fall and winter. These ice flow changes cannot be fully explained by the prevailing theory of channelizing subglacial drainage, suggesting that the existing theory is missing an important process. The study assessed whether changes in the weakly connected, swampy areas of the bed, which had previously been ignored, can explain why the ice sheet’s movement slows in late summer and winter.

The team developed a new model for weakly connected subglacial drainage and coupled it to an existing subglacial drainage model that included distributed and channelized flow. Researchers based the new model on water pressure measurements from boreholes drilled to the bed of the Greenland Ice Sheet, sampling regions of the bed that are only weakly connected to the surrounding system. The measurements reveal water pressure variations that are out of phase with the surface meltwater entering the glacier through moulins (shafts) and crevasses. When the enhanced model was applied to a well-studied field site in Greenland and forced with measurements of meltwater input to the ice sheet, it matched the water pressure variations measured in different parts of the subglacial drainage system. The modeled ice speed shows the same seasonal pattern as the observations: the same water level in the moulin that drains water to the bed produces slower ice flow later in the summer. This occurs because weakly connected regions of the bed become better connected as the basal drainage system grows during summer, causing some of these high-pressure areas to leak stored water. Lower water pressure and less lubrication of the bed lead to slower ice motion. A control simulation that excluded the new weakly connected drainage model could not reproduce the observed late-summer slowdown of ice speed.
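The mechanism described above, in which weakly connected regions leak stored water once summer pressurization connects them to the drainage system and then recharge only slowly by basal melting, can be illustrated with a toy single-reservoir model. This is not the model from the study. It is a hypothetical lumped sketch in which the water pressure p, expressed as a fraction of ice overburden, relaxes toward a low channel pressure at a rate set by connectivity and toward a high value through slow recharge. All parameter values and the function name are illustrative assumptions.

```python
def simulate_cavity_pressure(days=365, melt_start=150, melt_end=240, p0=0.95,
                             p_channel=0.6, p_max=0.95, k_summer=0.05,
                             k_winter=0.0005, recharge=0.01, dt=1.0):
    """Toy lumped model of one weakly connected region of the bed.

    All parameters are hypothetical. Pressure p is a fraction of ice overburden,
    integrated with forward Euler steps of dt days:
        dp/dt = -k * (p - p_channel) + recharge * (p_max - p)
    k (connectivity to the channelized system, per day) is large during the
    melt season and small otherwise; the recharge term stands in for slow
    basal melting. Returns the pressure at the start of each day (days + 1 values).
    """
    p = p0
    history = [p]
    for day in range(days):
        k = k_summer if melt_start <= day < melt_end else k_winter
        p += dt * (-k * (p - p_channel) + recharge * (p_max - p))
        history.append(p)
    return history


if __name__ == "__main__":
    h = simulate_cavity_pressure()
    for day in (0, 150, 240, 270, 365):
        # Effective pressure (overburden minus water pressure) is a proxy for basal traction.
        print(f"day {day:3d}: p/overburden = {h[day]:.3f}, effective pressure = {1 - h[day]:.3f}")
```

In this toy run the pressure falls over the melt season and is still well below its spring value a month after melt ends, so the effective pressure (1 - p), and with it basal traction, stays elevated into the fall.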

The new model results indicate that the existing conceptual model for subglacial drainage needs to be expanded to include a new component, weakly connected drainage. The research answers a longstanding question regarding the cause of the slowdown of the Greenland Ice Sheet in late summer and fall. The slow draining of swampy subglacial backwaters exerts an important regulating influence on the ice flow response to summer lubrication of the bed. These areas may therefore critically control how the Greenland Ice Sheet responds to future changes in melt, and thus the fate of the seven meters of potential sea level rise frozen within the ice sheet.

Reference: “Greenland Subglacial Drainage Evolution Regulated by Weakly Connected Regions of the Bed,” Nature Communications 7, 13903 (2016); doi: 10.1038/ncomms13903. Authors: Matthew J. Hoffman and Stephen A. Price (Fluid Dynamics and Solid Mechanics, T-3), Lauren C. Andrews and Thomas A. Neumann (NASA Goddard Space Flight Center), Ginny A. Catania (University of Texas – Austin), Martin P. Lüthi (University of Zürich), Jason Gulley (University of South Florida), Claudia Ryser (Swiss Federal Institute of Technology), Robert L. Hawley (Dartmouth College), and Blaine Morriss (Cold Regions Research and Engineering Laboratory).

The Laboratory Directed Research and Development (LDRD) program; the Earth System Modeling Program of the Office of Biological and Environmental Research within DOE’s Office of Science; NASA; and the National Science Foundation funded different aspects of the LANL work. The research supports the Lab’s Global Security mission area and the Information, Science and Technology science pillar through the development of subglacial hydrology models. Technical contact: Matthew Hoffman