Science Highlights, May 31, 2017

Awards and Recognition

Accelerator Operations and Technology

Developing autonomous particle accelerators that tune themselves

Capability Enhancement

Archiving project preserves and standardizes critical weapons data

New method measures plutonium for nuclear forensics applications

Computer, Computational and Statistical Sciences

Combining graph and quantum theories to calculate electronic structure of systems

Earth and Environmental Sciences

Using pressure to enhance the properties of lead-free hybrid perovskite

Materials Physics and Applications

Influence of dielectric environment on excitons in atomically thin semiconductors

Materials Science and Technology

Coupled experimental and computational studies enable materials damage prediction

Awards and Recognition

NNSA Defense Programs Awards of Excellence presented

The awards recognize significant achievements in quality, productivity, cost savings, safety, or creativity in support of NNSA’s Stockpile Stewardship Program. Defense Programs has elevated two teams for “exceptional achievement”: the Level 1 Pit Reuse and the Alt 940 teams. Brigadier General Michael Lutton (NNSA Principal Assistant Deputy Administrator for Military Application) and Bob Webster (Principal Associate Director of Weapons Programs, PADWP) presented the awards during a ceremony at the Laboratory. Technical contact: Jon Ventura

Level 1 Pit Reuse Team

2015 Level 1 Pit Reuse Milestone: John Scott (Integrated Design and Assessment, XTD-IDA) and Ray Tolar (formerly Lawrence Livermore National Laboratory and currently X-Theoretical Design, XTD-DO) led a Los Alamos and Lawrence Livermore National Laboratory team that established the technical basis for physics certification of a primary that utilizes an existing pit. The team demonstrated this by simulating Nevada Test Site events related to pit reuse, identifying areas of uncertainty, and providing bounds on those uncertainties.

In the photo: Level 1 Pit Reuse Team (From left) John Scott, Scott Crockett, Ray Tolar, Bill Blumenthal, General Lutton, and Bob Chrien. Not pictured: M. Andrews, R. Carver, N. Denissen, B. DeVolder, F. Doss, R. Gore, A. Hunter, D. Jablonski, M. Rightley, E. Rougier, D. Sandoval, M. Schraad, L. Welser-Sherrill, S. Sjue, M. Williams, J. Pedicini, G. Wall, S. Chitanvis, C. Nakhleh, and M. Sheppard (LANL); M. Aufderheide, G. Bazan, D. Goto, J. Greenough, C. Harrison, J. Heidrich, J. Hsu, J. Jeffries, A. Kupresanin, T. McAbee, F. Najjar, B. Pudliner, A. Puzder, T. Rose, A. Schach von Wittenau, D. Stevens, and B. Yang (Lawrence Livermore National Laboratory).

ALT 940 Design Team

ALT 940 LANL Design: Larry Brooks (B61-12 Life Extension Program, Q-15) led the ALT 940 Design Team in an innovative approach for the ALT 940 Integrated Surety Architecture. The design reached an advanced stage in a matter of months, including prototype demonstrators, a detailed qualification plan, a product maturation plan, and a budgetary cost estimate.

In the photo: ALT 940 Design Team (From left) David Moore, Larry Brooks, Will Schmitz, Brian Olsen, Lee Anderson, General Lutton, Manny Tafoya, and Matt Lee. Not pictured: R. Bishop, J. Velasquez, R. Ortiz, A. M. Peters, I. Maestas, S. Booth, B. Meyer, A. Gonzales, J. Borrego, J. Rau, E. Bauer, B. Davis, T. Semelsberger, B. Faulkner, J. Hicks, C. Hamilton, J. Veauthier, S. Richmond, J. Anderson, B. Weaver, and K. Swan-Bogard.

Eulerian Applications Project Modernization for Advanced Supercomputers Team

Eulerian Applications Project Modernization for Advanced Supercomputers: Joann Campbell (Eulerian Codes, XCP-2) led a team that carried out a successful modernization of the Eulerian Applications Project code base, resulting in a more agile, modular, maintainable, and optimized infrastructure. This work ensures the ability to run the code on next-generation high-performance supercomputers like Trinity, an essential tool supporting Directed Stockpile Work (DSW) mission requirements.

In the photo: Eulerian Applications Project Modernization for Advanced Supercomputers Team (From left) Zach Medin, Joann Campbell, and Sriram Swaminarayan. Not pictured: C. Aldrich, M. Calef, J. Cohn, G. Dilts, J. Grove, S. Halverson, M. Daniels, P. Fasel, C. Ferenbaugh, M. Hall, A. Hungerford, T. Kelley, T. Masser, M. Mckay, R. Menikoff, G. Rockefeller, B. Robey, J. Sauer, C. Snell, M. Snow, C. Wingate, and J. Wohlbier.

Eolus Weapons Analysis Team

Eolus Weapons Analysis Team: Avneet Sood (Monte Carlo Codes, XCP-3) and a team successfully completed a 4-year effort to modernize the Nuclear Weapons Complex’s only diagnostic and analysis code. This unique capability supports both Stockpile Stewardship and Global Security missions. This code has been the fundamental link to predict and interpret weapons diagnostics measured at Nevada Test Site as well as Above Ground Experiment (AGEX) facilities. This capability is in use today to help design the Enhanced Subcritical Experiments Facility at U1A and the Neutron Diagnosed Subcritical Experiments that will be fielded there.

In the photo: Eolus Weapons Analysis Team (From left) Tim Goorley, Tony Zukaitis, Jerewan Armstrong, and Avneet Sood. Not pictured: R. W. Brewer, L. J. Cox, R. A. Forster III, B. C. Kiedrowski, J. P. Lestone, M. E. Rising, and C. J. Werner.

B61-12 Environmental Specification Team

B61-12 LEP Environmental Specifications Team: Stuart Taylor (Mechanical and Thermal Engineering, AET-1) and the B61-12 Life Extension Program (LEP) Environmental Specifications Team created a high programmatic-impact data analysis and archiving tool called Echo that is critical to the success of the LEP qualification program. Echo has enabled the delivery of the first environmental specifications document for the B61-12 Life Extension Program. Echo is also being applied to the W88 refresh program.

In the photo: B61-12 Environmental Specification Team (From left) Dustin Harvey, Colin Haynes, Stuart Taylor, General Lutton, and John Heit.

Weapons Experimental Device Assembly Production Team

Weapons Experimental Device Assembly Production Team: Robert Gonzales (Technology Development, Q-18) and team worked diligently to meet an ever-increasing need for experimental packages to support Stockpile Stewardship. The experiments are one-off and require very specific and unique assemblies with large numbers of components, both newly manufactured and taken from the war reserve. The team’s creativity and innovative thinking enabled delivery of 332 experimental packages in 2015, an increase of 86 units over 2014.

In the photo: Weapons Experimental Device Assembly Production Team (From left) Todd Kimbrough, Brenda Quintana, Timothy White, Berlinda Trujillo, General Lutton, Julie Chavez-Hare, Rorke Murphy, and Rudy Originales. Not pictured: R. B. Ader, A. D. Archuleta, M. A. Echave, D. A. Gallant, R. D. Gonzales, M. A. Griego, L. A. Gurule, I. E. Herrera, L. R. Maez, J. D. Martinez, M. G. Martinez, R. J. Miller, J. V. Montoya, T. A. Mulder, J. E. Redding, A. L. Reeves, J. B. Richardson, J. O. Sutton, C. Tagart, M. H. Tompkins, D. C. Velasquez, M. Vigil, and D. R. Villareal.

B61-LEP Flight Test Team

B61-12 LEP Flight Test Team: Lance Kingston (B61-12 Life Extension Program, Q-15) and the B61-12 Life Extension Program (LEP) Flight Test Team contributed to the first three flight tests of the B61-12 at the Tonopah Test Range between July and October 2015. For each test, the team designed, assembled, and instrumented the Laboratory’s subassemblies that comprise the joint test assembly (JTA) nuclear explosives package. They delivered these subassemblies and oversaw their integration into the all-up rounds, ensuring proper form, fit, and connectivity during system assembly. The team participated in flight testing at the Tonopah Test Range, providing real-time health assessments of nuclear explosives package sensors during each test.

In the photo: B61-LEP Flight Test Team (From left) Stuart Taylor, Michael Collier, General Lutton, and John Heit. Not pictured: L. A. Kingston, P. O. Leslie, R. M. Renneke, M. H. Belobrajdic, and K. M. Terrones.

Neutron Imaging of Weapons Component Team

Neutron Imaging of Weapon Components: James Hunter (Non-Destructive Testing and Evaluation, AET-6) and colleagues have made exceptional progress over the last two years in the use of neutrons to image weapon components. The team used state-of-the-art hardware coupled with the extremely powerful neutron source at LANSCE to examine a variety of components across the active stockpile.

In the photo: Neutron Imaging of Weapons Component Team (From left) Frans Trouw, Ron Nelson, General Lutton, and Michelle Espy. Not pictured: J. Hunter, R. Schirato, T. Cutler, D. Mayo, A. Swift, and B. Temple.

LANL Weapons Response to W76/W78 Isolator Issue Team

LANL Weapon Response to W76/W78 Isolator Issue: Sandia National Laboratories informed Ginger Atwood (Technology Development, Q-18) that a previously unknown potential manufacturing defect in a Sandia isolator could affect Pantex operations. Weapon Response and Military Liaison (W-10) and Explosive Applications and Special Projects (M-6) conducted a series of quick turn-around experiments to obtain the required data for analysis. W-10 provided new weapon response that reduced the conservatism of the prior weapon response under which Pantex was operating. The data enabled Pantex to use fewer controls in their processing operations while retaining a well-characterized and documented safety margin.

In the photo: LANL Weapons Response to W76/W78 Isolator Issue Team (From left) Paul Peterson, Francis Martinez, Ginger Atwood, Dan Brovina, Mark Mundt, and General Lutton. Not pictured: K. Rainey, B. Matavosian, P. Dickson, P. Rae, T. Foley, A. Novak, E. Baca, C. Armstrong, J. Gunderson, and J. Shipley.

Accelerator Operations and Technology

Developing autonomous particle accelerators that tune themselves

Particle accelerators are powerful scientific tools, providing electron, proton, neutron, and high-energy x-ray beams to probe materials and their dynamics. Accelerators are also very complex, having thousands of coupled, time-varying, and nonlinear components, which present tuning and control challenges. For example, free electron lasers (FELs) such as the Linac Coherent Light Source (LCLS) at the SLAC National Accelerator Laboratory require tens of hours of manual tuning to switch machine settings (pulse width, charge, beam energy, etc.) for various users. These difficulties cannot be resolved by model-based feedback controllers alone, which are limited by uncertainties, approximations, and un-modeled disturbances. These challenges will be compounded in next-generation light sources such as LCLS-II and the Laboratory’s proposed MaRIE XFEL, whose energy, coherence, brightness, and repetition rate goals are beyond the current state of the art.

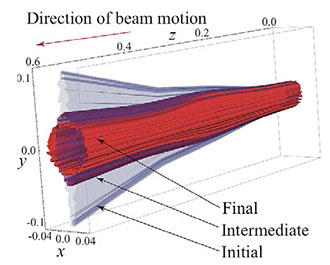

Figure 1. Simulation depicts automatic magnet tuning with ESC for beam size minimization.

Alexander Scheinker (RF Engineering, AOT-RFE) has been developing a feedback-based form of Artificial Intelligence (AI), which enables complex systems to tune themselves in response to unexpected disturbances in real time. The method is known as extremum seeking for control and stabilization (ESC). ESC is model independent and is applicable to a wide range of systems including autonomous vehicle guidance, material science, plasma energy systems, and non-invasive electron beam property prediction. Scheinker and collaborators are applying ESC for automated tuning of the LANSCE RF and magnet systems, as well as the LCLS FEL. Figure 1 shows a simulation of ESC beam focusing by groups of quadrupole magnets. The journal IEEE Transactions on Control Systems Technology published an in-hardware demonstration of ESC for autonomous accelerator tuning at SLAC, and the book Model-Free Stabilization by Extremum Seeking presented a general overview of ESC.

Although accelerators have diagnostics, automatic digital control systems, and sophisticated beam dynamics models, it is impossible to use a lookup table to switch quickly between different operating conditions with the push of a button. For example, during regular operation at the oversubscribed LCLS FEL facility, up to 6 hours of a valuable 12-hour beam time is sometimes wasted on manual tuning to provide users with their desired light properties. The sources of such tuning difficulty include complex collective effects. Operators also spend hours hand-tuning machine settings to maintain steady-state operation.

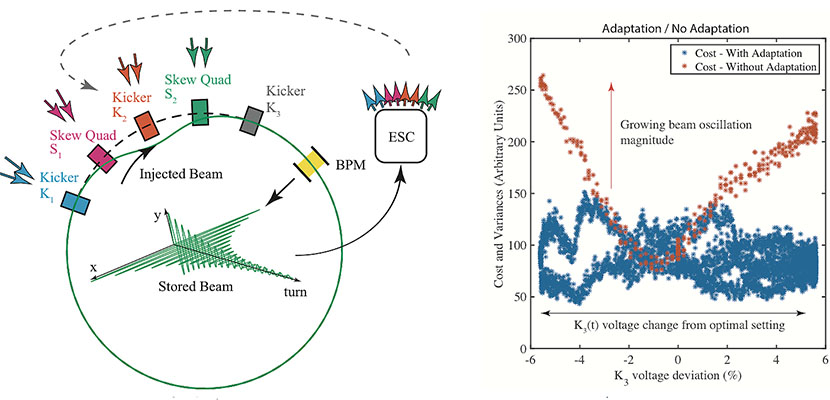

Particle accelerators have two major classes of components: 1) electromagnetic cavities for beam acceleration and longitudinal focusing and 2) magnets for beam steering and transverse focusing. Scheinker and collaborators aimed to demonstrate that ESC could safely and autonomously tune a group of magnets to optimize electron beam dynamics despite time-varying, unknown disturbances. The researchers conducted an ESC demonstration on the SPEAR3 light source at SLAC, where a group of five magnets is used to kick the electron beam in and out of orbit. Although the beam is kicked only in the horizontal direction, nonlinearities and higher order field components in magnets throughout the machine couple horizontal and vertical electron motion, creating a complicated coupling between horizontal dipole kicker magnets and quadrupole and skew quadrupole magnets. An imperfect match of magnetic field strengths and pulse widths causes the electron beam to perform betatron oscillations about its design orbit, which must naturally damp out (due to synchrotron radiation) before the electrons are useful for experimenters. The LANL/SLAC team demonstrated that ESC could autonomously and continuously re-tune four of the kicker system magnets in response to unknown time-varying changes of the fifth kicker magnet. ESC maintained close to optimal electron injection matching.

Figure 2. (Left): ESC actively tuned 8 parameters (pulse widths and field strengths of 4 magnets) based on a scalar measurement of beam oscillations provided by a beam position monitor (BPM) for time-varying magnetic field strength [K3(t)]. (Right): Beam oscillation magnitudes increase without ESC feedback (red) and are minimized continuously by ESC (blue).
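As a rough illustration of how a model-independent scheme of this kind can work, the Python sketch below applies one bounded extremum-seeking update described in the control literature to a toy two-parameter problem. It is not the code used at SPEAR3. The quadratic `toy_cost` stands in for the scalar beam-oscillation measurement, and the function name `esc_minimize`, the dither frequencies, the gains `alpha` and `k`, and the time step are all assumptions chosen for illustration.

```python
import numpy as np

def esc_minimize(cost, p0, omegas, alpha=0.5, k=2.0, dt=1e-3, steps=20_000):
    """Bounded extremum-seeking iteration (illustrative parameter values).

    Each parameter p_i is dithered at its own frequency omega_i. The measured
    scalar cost only shifts the phase of that dither, so every step is bounded
    by dt * sqrt(alpha * omega_i) no matter how large the cost is.
    """
    p = np.asarray(p0, dtype=float).copy()
    omegas = np.asarray(omegas, dtype=float)
    history = np.empty(steps)
    for n in range(steps):
        c = cost(p)              # one scalar measurement per step, no model
        history[n] = c
        p += dt * np.sqrt(alpha * omegas) * np.cos(omegas * n * dt + k * c)
    return p, history

def toy_cost(p):
    # Hypothetical stand-in for a measured oscillation amplitude;
    # the optimum at (0.3, -0.2) is unknown to the controller.
    return float(np.sum((p - np.array([0.3, -0.2])) ** 2))

p_final, hist = esc_minimize(toy_cost, p0=[1.0, -1.0], omegas=[200.0, 330.0])
print(p_final, hist[0], hist[-2000:].mean())
```

The controller never evaluates a gradient or a machine model. Because the two parameters use distinct dither frequencies, their effects on the single scalar measurement separate on average, and the averaged motion drifts downhill on the cost. In a real machine the cost would also change with time, which this static toy problem does not attempt to capture.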

References:

“Minimization of Betatron Oscillations of Electron Beam Injected into a Time-Varying Lattice via Extremum Seeking,” IEEE Transactions on Control Systems Technology (2017); doi: 10.1109/TCST.2017.2664728. Authors: A. Scheinker (AOT-RFE); X. Huang and J. Wu (SLAC National Accelerator Laboratory).

LANSCE operations fund the application of ESC to the LANSCE accelerator RF and magnet systems. A Laboratory Directed Research and Development (LDRD) project sponsors a collaborative effort for automated tuning of the LCLS FEL.

The work supports the Lab’s Nuclear Deterrence mission area and the Nuclear and Particle Futures, Materials for the Future, and Information, Science and Technology science pillars. Enhancing the performance and reliability of accelerators could benefit Laboratory programs that require materials data. For example, the Lab’s LANSCE for neutrons and proton radiography, LCLS for short, coherent x-ray flashes for dynamics studies, and the Advanced Photon Source (APS) at Argonne for hard x-ray 3D material imaging collect materials data for Lab programs. Moreover, ESC could have a direct impact on capabilities to realize the extreme electron beam phase space requirements of the LCLS-II FEL and the Laboratory’s proposed MaRIE. Technical contact: Alexander Scheinker

Bioscience

Lab hosts Sequencing, Finishing, and Analysis in the Future conference

- Genome Sequencing

- Genome Assembly

- Genome Improvement

- Genome Analysis

- Applications of Next Generation Sequencing

Los Alamos conference organizers included Patrick Chain and Shannon Johnson (Biosecurity and Public Health, B-10), and David Bruce (Bioscience, B-DO). Richard Sayre (Bioenergy and Biome Sciences, B-11), Jean Challacombe, and Patrick Chain (B-10) gave talks, and Laboratory researchers presented nine posters.

Based on the Laboratory’s strong reputation in genomics and bioinformatics – from developing protocols to ensuring high quality data to creating novel analysis software – the Department of State and the Department of Defense rely on Los Alamos to perform a leadership role in Cooperative Threat Reduction programs. As part of this engagement, the Laboratory trains scientists at partner institutions worldwide to use genomics to detect disease quickly. One such training has been held on site at the Lab each year for the last five years. This year 29 guest attendees from nine countries participated. Technical contacts: Patrick Chain and Tracy Erkkila

Capability Enhancement

Archiving project preserves and standardizes critical weapons data

The Laboratory performed 405 underground tests of nuclear devices at the Nevada Test Site from 1962 through the end of testing in 1992. In addition to radiological analysis of test debris, all of these tests included prompt measurements of the flux of gamma photons, called the gamma reaction history, from the device. Researchers recorded this prompt data almost exclusively on film. A typical nuclear event produced 70-150 reaction history data films as well as more than 500 supporting documents, some printed and some handwritten. Many tests included measurements of the flux of neutrons from the device, which contributed many more pieces of film and paper documents. The films are subject to aging effects such as physical shrinkage, which has serious consequences for the calibration of the data recorded on the film. Paper documents are vulnerable to aging effects such as discoloration or breakdown of the ink.

Because nuclear testing ended in 1992, all efforts related to the maintenance, certification, or updating of the nuclear stockpile depend on this data. The development of 2D and 3D supercomputer simulations of nuclear weapons for stockpile certification or global security applications relies on this experimental data for verification. Therefore, it is critical to convert this priceless data to digital form for preservation.

Photo. The gamma reaction history documents and films archive. All the document folders visible in this photo relate to reaction history and have been converted to electronic form.

Los Alamos and National Security Technologies, LLC (NSTec) have scanned the entire reaction history film and document holdings, a campaign that has taken about 12 years. The work produced a complete, fully digitized, standardized, and easily searchable secure archive of all gamma reaction history measurements from the Lab’s underground nuclear events from 1962 to 1992. This achievement has greatly enhanced the accessibility and usefulness of the records. The archive includes data films, all the experiment-related documents, the original experiment analyses for all the nuclear events, and copies of all the later reanalyses and research on the data. The data are readily available to analysts in Physics (P) Division and to designers and modelers in X Theoretical Design (X-TD) and Computational Physics (X-CP) divisions.

The team plans to scan all of the film for neutron diagnostics for the nuclear events by the end of FY17. Scanning the paper documentation will require more time. Full modern analyses, including uncertainty quantification, of the reaction history data from 80 shots and the neutron diagnostics data from 55 shots have been completed to date.

Barry Warthen (Hydrodynamic and X-ray Physics, P-22) initiated the work in 2005. Hanna Makaruk (Applied Modern Physics, P-21) has led the campaign from 2011 to the present. Yvette Maes (P-21) has coordinated the day-to-day effort, maintaining a master table of progress, acting as liaison to NSTec, and organizing and maintaining the electronic archive. June Garcia (P-21) maintained and populated the archive. Robert Hilko and William Gibson (NSTec) scanned films from 249 underground nuclear events and 28,000 pages of documents from 54 tests.

The Data Validation and Archiving project, NNSA Verification and Validation of the Advanced Simulations and Computing (ASC) program, led by Fred Wysocki, funded the work, which supports the Lab’s Nuclear Deterrence mission area and the Nuclear and Particle Futures science pillar. Technical contact: Hanna Makaruk

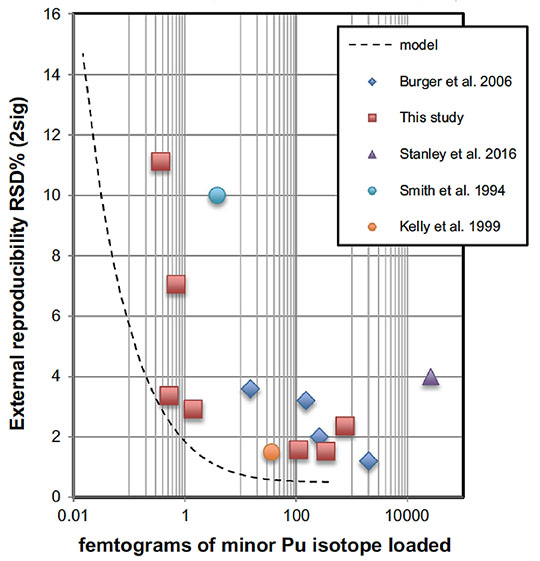

New method measures plutonium for nuclear forensics applications

Researchers in the Nuclear and Radiochemistry Group (C-NR) have developed a precise method of analyzing plutonium using thermal ionization mass spectrometry (TIMS). The technique has applications for nuclear forensics, worker health and safety, and environmental measurements. The Journal of Radioanalytical and Nuclear Chemistry published the findings.

These applications require precise isotopic measurements of minuscule amounts of material to aid in the characterization of radionuclides such as uranium and plutonium. TIMS is an analytical technique that is often used for this type of analysis. The technique involves loading a purified sample onto a filament, which is then heated to promote the ionization required for mass spectrometer analysis. Conventional TIMS instruments employ an array of Faraday collectors for the isotopic analysis of the ionized sample. However, the nanogram-sized samples needed for precise measurements using Faraday collectors limit the scope of forensic work that can be undertaken. Analysis at the picogram and sub-picogram level, where the filament may ionize just a few ions per second of a minor isotope, requires the use of specialized ion counting detectors. Because most TIMS instruments available today employ only a single specialized ion counter, these instruments do not allow simultaneous detection of each isotope of plutonium in a sample. The single ion counter reduces the number of ratio measurements that can be made in the short time that the sample lasts, thereby limiting the precision of the analysis. Inaccuracy could also be introduced into the analysis if the signal drifts during the switch from one isotope measurement to another.

The Los Alamos work avoids the pitfalls of conventional TIMS analysis. The new method measures all isotopes of interest simultaneously by combining total evaporation analysis and a customized TIMS instrument populated with multiple ion counters. This innovative technique is called multiple ion counter total evaporation (MICTE).

Figure 3. External reproducibility of plutonium (Pu) isotope ratio measurements as a function of sample size when using MICTE. Dashed line represents what is theoretically achievable using MICTE. Red squares indicate actual data using the MICTE method in the C-NR clean lab facility and are taken from repeated measurements of the certified reference materials analyzed in the study.

Traditional total evaporation analyses employ a static arrangement of Faraday cups for simultaneous measurement of the ion signal from each of the isotopes in a sample. Dividing the total summed signal intensities from the cups in question determines the isotope ratios. Multiple ion counter total evaporation applies the same principles as traditional total evaporation methods, but the array of Faraday cups is replaced by a similar arrangement of six specialized ion counters. The ion counters provide high amplification with inherently low noise to enable the detection of the very small ion currents generated during the analysis of picogram-sized plutonium samples. The researchers used this multiple ion counter total evaporation procedure for the precise analysis of the minor isotopes in samples down to the sub-femtogram level. These advances in instrumentation could allow significant improvements to be made in the analysis of plutonium at ultra-low levels for a variety of monitoring applications, including the Laboratory’s bioassay program, nuclear forensics, and environmental measurements.
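The ratio arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration, not the group's analysis software. The function name and the count values are hypothetical, and the uncertainty term is the standard Poisson counting estimate, which is an added assumption rather than something reported in the article.

```python
import math

def total_evaporation_ratio(minor_counts, major_counts):
    """Isotope ratio from signals summed over the whole evaporation run.

    minor_counts, major_counts: per-integration ion counts recorded
    simultaneously on two detectors until the sample is consumed.
    """
    minor_total = sum(minor_counts)
    major_total = sum(major_counts)
    ratio = minor_total / major_total
    # Relative uncertainty from Poisson counting statistics alone
    # (assumption: ignores detector gain, dead time, and background).
    rel_unc = math.sqrt(1.0 / minor_total + 1.0 / major_total)
    return ratio, rel_unc

# Hypothetical counts for three integrations of one small sample
pu239 = [1000, 4000, 5000]
pu240 = [60, 240, 300]
ratio, rel_unc = total_evaporation_ratio(pu240, pu239)
print(ratio, rel_unc)
```

Because the ratio is formed from the summed signals rather than from individual integrations, every ion collected during the run contributes to the result, which matters most when the minor isotope yields only a few counts per second.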

Reference: “A Multiple Ion Counter Total Evaporation (MICTE) Method for Precise Analysis of Plutonium by Thermal Ionization Mass Spectrometry,” Journal of Radioanalytical and Nuclear Chemistry 312, 663 (2017); doi: 10.1007/s10967-017-5259-1. Authors: Jeremy D. Inglis, Joel Maassen, Azim Kara, Robert E. Steiner, William S. Kinman, and Dennis Lopez (Nuclear and Radiochemistry, C-NR).

The Laboratory’s bioassay program funded the efforts to improve the low-level capabilities of TIMS measurements. The improvements to existing TIMS techniques will provide better sensitivity and precision for measurements used to monitor potential worker exposures to radionuclides as part of the Laboratory’s in vitro bioassay program. The work supports the Laboratory’s Global Security mission area and the Science of Signatures science pillar. Technical contact: Jeremy Inglis

Chemistry

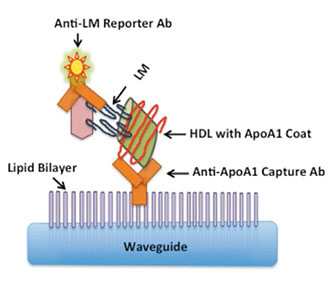

Tuberculosis field test for cattle

Early and rapid detection of bovine tuberculosis (TB) is critical to control its spread in cattle and other animals. Costly quarantines and the mass slaughter of infected cattle can result from efforts to control the disease. In an article in the journal Analytical Sciences, Lab researchers and collaborators report the first direct, blood-based field test for diagnosing bovine TB.

TB infects both animals and humans. The pathogen Mycobacterium bovis causes the disease in cattle. It easily spreads among large herds, resulting in the quarantine and destruction of thousands of cattle in the United States, Canada and abroad. TB also infects wild deer and elk, which can pass it on to domestic cattle with which they graze. The skin test that is currently used to detect exposure or infection in cattle is problematic. Due to various colors of cows’ skin and hide, environmental exposures, previous testing for M. bovis, and other factors, testing is often inconclusive. An additional challenge is gathering a herd twice – once for testing and again 72 hours later to interpret the skin test.

Figure 4. Schematic of the lipoprotein capture assay. When the lipomannan (LM) biomarker for bovine TB attaches to the lipid bilayer on the waveguide, it generates a detectable signal. HDL is high-density lipoprotein; anti-ApoA1 Capture Ab is an antibody that targets apolipoprotein A1, the coat protein of HDL.

The team developed a bovine TB biosensor test to address these issues. The sensor detects a biomarker that M. bovis secretes. The researchers created an immunoassay for direct detection of the bovine TB biomarker lipomannan (LM) in serum using a waveguide-based optical biosensor. The detector uses an ultrasensitive detection strategy that the Lab’s Biosensor team had developed to identify disease biomarkers in blood. The article also describes the change in bovine TB biomarkers at different stages of infection. This is the first time that the lipomannan biomarker has been directly detected in bovine blood.

The Los Alamos team has developed a test for TB in humans, which detects a blood biomarker called lipoarabinomannan (LAM). LAM is difficult to detect because it is a lipid, a molecule that does not blend with the watery blood and so eludes regular testing techniques. Naturally occurring lipoproteins (e.g., HDL and LDL measured for cholesterol analysis) enabled the researchers to measure LAM in blood. To detect LAM, the Los Alamos team has developed a novel assay, lipoprotein capture, which exploits the interaction of LAM with host lipoproteins and can thereby detect it in blood. By combining this assay with a highly sensitive waveguide-based optical biosensor for the rapid, sensitive and specific detection of pathogenic biomarkers, the team has effectively identified oily biomarkers associated with diseases like tuberculosis, food poisoning, leprosy and others. The researchers adapted the human assay test for TB to detect the analogous biomarker LM in cattle.

There are several novel aspects of the bovine TB detection work: 1) it demonstrates that bovine LM is also associated with lipoproteins in cattle sera, which establishes a similarity of host-pathogen interaction in this species; 2) the method shows that unlike host biomarker studies, pathogen biomarker-based measurements can be used in human and animal hosts; and 3) it demonstrates for the first time the direct detection of bovine LM in serum, and shows that the biomarker is expressed in detectable concentrations during the entire course of the infection. Biomarker detection enables researchers to distinguish between simple exposure and active infection, something that the current skin test cannot do. The test is an important step in the direction of providing rapid, point-of-care diagnostics for bovine TB.

The work is an illustration of the global One Health strategy of developing diagnostics that are not host limited, which means that the diagnostics can be applied to other organisms. The One Health concept recognizes that the health of people is connected to the health of animals and the environment. The U.S. CDC uses a One Health approach by working with physicians, veterinarians, ecologists, and many others to monitor and control public health threats and to learn about how diseases spread among people, animals, and the environment.

Reference: “Detection of Lipomannan in Cattle Infected with Bovine Tuberculosis,” Analytical Sciences 33, 457 (2017); doi: 10.2116/analsci.33.457. Authors: Dung M. Vu, Rama M. Sakamuri, and Harshini Mukundan (Physical Chemistry and Applied Spectroscopy, C-PCS); W. Ray Waters (US Department of Agriculture); and Basil I. Swanson (Biosecurity and Public Health, B-10).

The biosensor work began as a Laboratory Directed Research and Development (LDRD) project and developed over years into a mature technology for detecting TB and other diseases in humans. The New Mexico Small Business Assistance (NMSBA) program funded the research to adapt the biosensor technology to detect bovine TB. Ranchers and veterinarians in New Mexico supported this work via NMSBA. The work supports the Laboratory’s Global Security mission area and the Science of Signatures science pillar by providing innovative tools for disease surveillance and improving responses to emerging threats to health. Technical contact: Harshini Mukundan

Computer, Computational and Statistical Sciences

Combining graph and quantum theories to calculate electronic structure of systems

The editors of The Journal of Chemical Physics annually select a few articles that present significant and definitive research in experimental and theoretical areas of chemical physics. Lab researchers and a collaborator authored the paper, “Graph-based Linear Scaling Electronic Structure Theory,” which the editors selected in the area of Theoretical Methods and Algorithms.

The importance of electronic structure theory in materials science, chemistry, and molecular biology relies on the development of theoretical methods that provide sufficient accuracy at a reasonable computational cost. The immense promise of linear scaling electronic structure theory has never been fully realized due to some significant shortcomings: 1) the accuracy is reduced to a level that is often difficult, if not impossible, to control; 2) the computational pre-factor is high, and the linear scaling benefit occurs only for very large systems that in practice often are beyond acceptable time limits or available computer resources; and 3) the parallel performance is generally challenged by a significant overhead, and the wall-clock time remains high even with massive parallelism. All these problems coalesce in quantum-based molecular dynamics simulations, which constrain calculations to small system sizes or short simulation times.
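The second shortcoming, a high pre-factor that delays the payoff of linear scaling, can be made concrete with a toy cost model. The Python sketch below assumes a conventional method whose cost grows as the cube of the number of atoms, as dense matrix diagonalization does, and a linear scaling method with a much larger cost per atom. Both pre-factors and the function name are hypothetical values chosen only to show where the crossover falls.

```python
import math

def crossover_size(linear_prefactor, cubic_prefactor):
    """System size N* above which a * N is cheaper than b * N**3."""
    return math.sqrt(linear_prefactor / cubic_prefactor)

# Hypothetical costs: a per atom (linear method), b per atom cubed (cubic method)
a, b = 1.0e4, 1.0e-2
n_star = crossover_size(a, b)

for n in (500, 2000):
    linear_cost = a * n
    cubic_cost = b * n ** 3
    cheaper = "linear" if linear_cost < cubic_cost else "cubic"
    print(n, linear_cost, cubic_cost, cheaper)
```

Under these assumed numbers the linear scaling method only wins for systems larger than `n_star` atoms, and a larger pre-factor pushes that threshold higher, which is the practical obstacle the paragraph describes.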

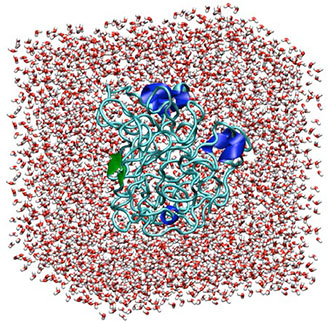

Figure 5. Polyalanine (2593 atoms) solvated in water with a total of 19,945 atoms. In the graphic, white represents hydrogen atoms and red represents oxygen in the solvating water molecules. Blue and green represent polyalanine.

For the analysis, the researchers used a 19,945-atom test system of polyalanine (2593 atoms) in liquid water. The team chose alanine because it is possibly the simplest chiral amino acid that enables the formation of stable secondary structures. Investigators can build models with this simple peptide that include linear, α-helix, and β-sheet polyalanine secondary structures, which introduce extra complexity to the system. These secondary structures and complexity are needed to test the graph-based electronic structure framework. The density of the final globular structure is around 0.7 g/ml, which is a reasonable value for globular proteins.

Reference: “Graph-based Linear Scaling Electronic Structure Theory,” The Journal of Chemical Physics 144, 234101 (2016); doi: 10.1063/1.4952650. Authors: Hristo Djidjev, Susan Mniszewski, and Michael Wall (Information Sciences, CCS-3); Nicolas Bock and Jamal Mohd-Yusof (Applied Computer Science, CCS-7); Marc Cawkwell, Timothy Germann, Christian Negre, and Anders Niklasson (Physics and Chemistry of Materials, T-1); Pieter Swart (Applied Mathematics and Plasma Physics, T-5); and Emanuel H. Rubensson (Uppsala University).

The DOE Office of Science, Office of Basic Energy Sciences funded the underlying theory, basic concepts, and simple initial proof. The Laboratory Directed Research and Development (LDRD) program sponsored the computer science and platform-specific optimization using the graph partitioning tool. The research used resources from LANL’s Institutional Computing Program. The work supports the Lab’s Energy Security mission area and the Information, Science, and Technology and the Materials for the Future science pillars. Technical contact: Susan Mniszewski

Earth and Environmental Sciences

Using pressure to enhance the properties of lead-free hybrid perovskite

Perovskite solar cells, based on hybrid organic-inorganic halide perovskites, have potential energy applications due to their high power conversion efficiencies and low manufacturing costs. Lead-based organic-inorganic halide perovskite solar cells have achieved power conversion efficiencies of over 20%. However, some scientific and technical challenges remain for practical applications of the perovskite photovoltaic materials: 1) the lack of a fundamental understanding of the structure–property relationship, 2) their relatively low phase stability, and 3) the lead in the materials poses toxicity and environmental concerns. Los Alamos researchers and collaborators used high pressure to tune material structures and properties of an alternative material, a lead-free tin halide perovskite (CH3NH3SnI3). Their studies have revealed an increased electrical conductivity, improved structural stability, and enhanced light absorption in the pressure-treated perovskite. Advanced Materials published this work.

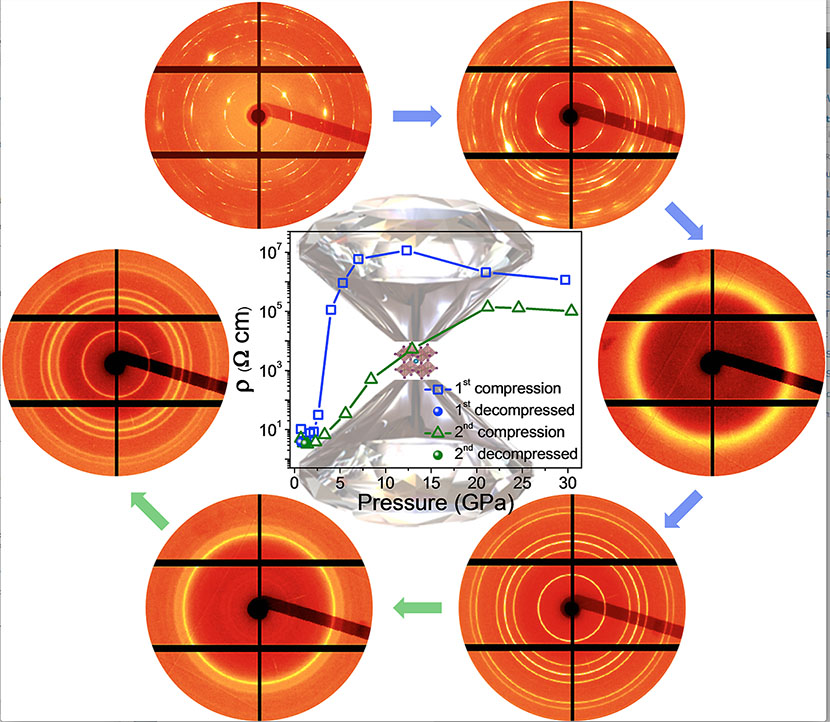

External pressure can provide a direct way to adjust interatomic distances and effectively tune the crystal structure and electronic properties. Therefore, the team conducted in-situ structural, electrical conductivity, and photocurrent measurements of CH3NH3SnI3 perovskite under high pressures (up to 30 GPa) using a diamond anvil cell. Through two successive compression-decompression cycles, the researchers found that the pressure-treated product exhibits better phase stability as well as improved electrical conductivity and visible light responsiveness. High-pressure synchrotron x-ray diffraction revealed that the hybrid perovskite undergoes pressure-induced amorphization on compression, followed by recrystallization upon decompression. Complementary electronic transport measurements demonstrated that the recovered low-pressure phase exhibits an increase in its electrical conductivity of over 300% compared with pristine samples. Moreover, the second pressure cycling resulted in increased structural stability without amorphization up to 30 GPa. The team concluded that the enhanced structural stability and electronic properties are due at least in part to higher crystallographic symmetry, more uniform grain sizes, and microstructural modifications that cannot be easily achieved through conventional synthetic routes.

Figure 6. (Moving clockwise from top left): First pressure cycling (blue arrows) applied on an organotin halide-based perovskite leads to a pressure-induced amorphization and recrystallization; second pressure cycling (green arrows) reveals enhanced structural stability without amorphization up to 30 GPa in the pressure-treated perovskite.

Reference: “Enhanced Structural Stability and Photo Responsiveness of CH3NH3SnI3 Perovskite via Pressure-Induced Amorphization and Recrystallization,” Advanced Materials 28, 8663 (2016); doi: 10.1002/adma.201600771. Authors: Xujie Lü, Xiaofeng Guo, and Hongwu Xu (Earth System Observations, EES-14); Quanxi Jia (Center for Integrated Nanotechnologies, MPA-CINT); Yonggang Wang and Yusheng Zhao (University of Nevada – Las Vegas); Constantinos C. Stoumpos, Haijie Chen, and Mercouri G. Kanatzidis (Northwestern University); Qingyang Hu, Liuxiang Yang, Wenge Yang, and Jesse S. Smith (Carnegie Institution of Washington).

The Laboratory Directed Research and Development (LDRD) program funded the Los Alamos research. The work at Los Alamos National Laboratory was performed, in part, at the Center for Integrated Nanotechnologies (CINT), an Office of Science User Facility operated for the DOE, Office of Science. The research supports the Lab’s Energy Security mission area and the Materials for the Future science pillar through the development of materials for solar energy cells. Technical contacts: Xujie Lü and Hongwu Xu

Materials Physics and Applications

Influence of dielectric environment on excitons in atomically thin semiconductors

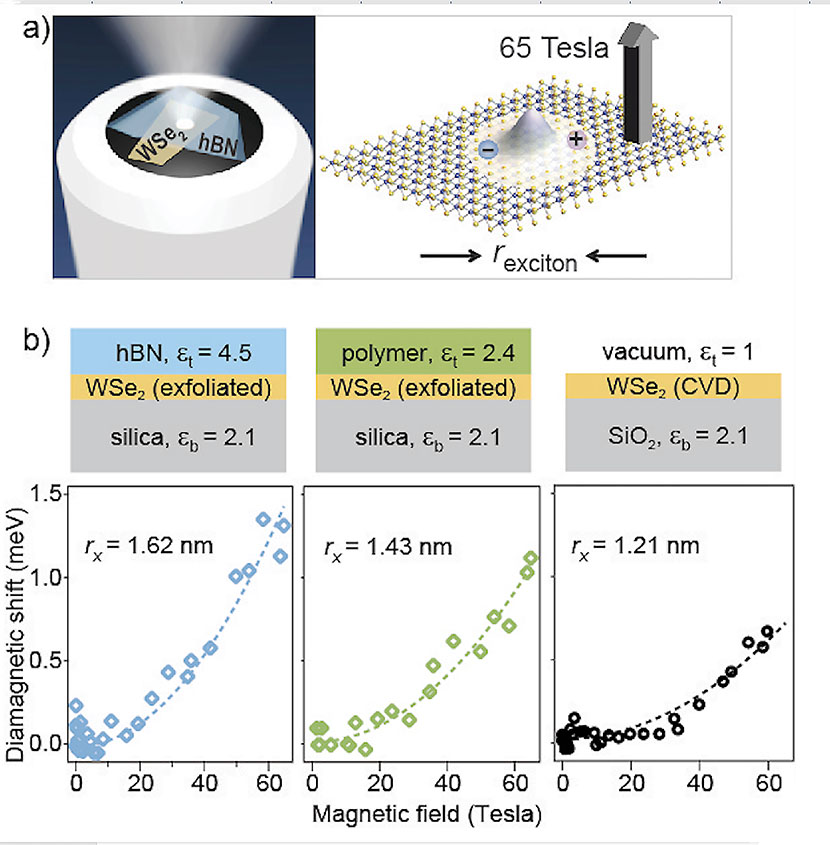

Los Alamos researchers and their collaborators have used the high magnetic fields generated at the National High Magnetic Field Laboratory’s Pulsed Field Facility at Los Alamos to directly measure how the properties of excitons (bound electron-hole pairs) in atomically thin semiconductors are influenced by the surrounding environment. Their results demonstrate how both the size and binding energy of excitons might be tuned in future 2D devices that are based on assembly of atomically thin semiconductors and other monolayer materials for optoelectronic and energy applications. Nano Letters published their findings.

Dielectric screening plays an essential role in semiconductor physics. It modifies the interactions between electronic carriers and therefore strongly impacts the transport phenomena and optoelectronic properties via its influence on both the size and binding energy of excitons. The excitons in the new family of atomically thin 2D semiconductors, such as molybdenum disulfide (MoS2) and tungsten diselenide (WSe2), lie close to a surface. Therefore, their properties are expected to be strongly influenced by the surrounding dielectric environment. However, systematic studies exploring this role are challenging, in part because the most readily accessible exciton parameter – the exciton’s optical transition energy – is largely unaffected by the surrounding medium.

To overcome this barrier, the team exploited the fact that the small quadratic energy shift of the exciton’s optical transition in high magnetic fields, the “diamagnetic shift,” scales with the square of the exciton’s physical size. The researchers used exfoliated WSe2 monolayer flakes affixed to single-mode optical fibers. The investigators tuned the surrounding dielectric environment by encapsulating the flakes with different materials, and then performed polarized low-temperature magneto-absorption studies to 65 T. Their results quantify for the first time the systematic increase of the exciton’s size (and concurrent reduction of the exciton’s binding energy) as dielectric screening from the surrounding materials is increased. This insight demonstrates how exciton properties could be tuned in future 2D optoelectronic devices. Moreover, the experimental technique they developed could be broadly applicable to a wide variety of new and interesting 2D materials for studies of fundamental exciton and optical properties.
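The link between the diamagnetic shift and exciton size can be sketched numerically. The standard textbook relation is ΔE = σB², with σ = e²⟨r²⟩/(8 m_r), so the root-mean-square radius follows as r_X = √(8 m_r σ)/e. The sketch below assumes this relation; the diamagnetic coefficient and reduced mass used are hypothetical placeholders, not values reported in the paper.

```python
import math

E_CHARGE = 1.602176634e-19      # elementary charge, C
M_ELECTRON = 9.1093837015e-31   # free-electron mass, kg

def diamagnetic_shift_ev(sigma_ev_per_t2, b_tesla):
    """Quadratic energy shift sigma * B**2, in eV."""
    return sigma_ev_per_t2 * b_tesla ** 2

def exciton_radius_m(sigma_ev_per_t2, reduced_mass_me):
    """RMS exciton radius r_X = sqrt(8 * m_r * sigma) / e, in meters."""
    sigma_joule = sigma_ev_per_t2 * E_CHARGE        # convert eV/T^2 to J/T^2
    m_r = reduced_mass_me * M_ELECTRON              # convert to kg
    return math.sqrt(8.0 * m_r * sigma_joule) / E_CHARGE

# Hypothetical inputs (assumptions for illustration only)
sigma = 0.3e-6      # diamagnetic coefficient, eV/T^2
m_reduced = 0.2     # exciton reduced mass, in units of the free-electron mass

shift_at_65t = diamagnetic_shift_ev(sigma, 65.0)
radius = exciton_radius_m(sigma, m_reduced)
print(f"shift at 65 T: {shift_at_65t * 1e3:.2f} meV, r_X: {radius * 1e9:.2f} nm")
```

Because the shift is only of order a millielectronvolt even at 65 T for coefficients of this size, very high fields are needed to measure it reliably, and a larger measured σ at fixed reduced mass implies a larger exciton.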

Figure 7. (a) Schematic: a single exfoliated crystal of monolayer WSe2 is transferred and positioned over the 3.5 μm diameter silica core of a single-mode optical fiber (not drawn to scale). The WSe2 flake is encapsulated with either hexagonal boron nitride (as depicted) or other material to tune the dielectric environment. The resulting assembly is physically robust and ensures that light passes only through the monolayer flake and does not move with respect to the flake, even in the cryogenic bore of a 65 T pulsed magnet. (b) Plots show the quadratic diamagnetic shift of the exciton transition, from which the exciton’s radius rX (and also its binding energy) can be inferred. The diamagnetic shift is measured for three different dielectric environments depicted by the diagrams.

Reference: “Probing the Influence of Dielectric Environment on Excitons in Monolayer WSe2: Insight from High Magnetic Fields,” Nano Letters, (2016); doi: 10.1021/acs.nanolett.6b03276.

Authors: Andreas V. Stier and Scott A. Crooker (Condensed Matter and Magnet Science, MPA-CMMS); Nathan P. Wilson, Genevieve Clark, and Xiaodong Xu (University of Washington). Billy Vigil (MPA-CMMS) supported the project by building one of the high-field probes.

The research benefitted from the use of the 65 T capacitor-driven pulsed magnets at the National High Magnetic Field Laboratory, which the National Science Foundation (NSF) and the state of Florida fund. The work supports the Lab’s Energy Security mission area and Materials for the Future and Science of Signatures science pillars through the development of methods to investigate the properties of 2D materials for semiconductors and other energy applications. Technical contact: Scott Crooker

Materials Science and Technology

Coupled experimental and computational studies enable materials damage prediction

A Los Alamos materials scientist, theorist, and statistical scientist authored an overview of the Laboratory’s research to develop a coupled experimental and computational methodology to predict materials damage. The article, by Veronica Livescu (Materials Science in Radiation and Dynamics Extremes, MST-8), Curt Bronkhorst (Fluid Dynamics and Solid Mechanics, T-3), and Scott Vander Wiel (Statistical Sciences, CCS-6), appeared in Advanced Materials & Processes.

Accurate description of materials often involves the characterization of many variables that can only be captured in a statistical sense, e.g., chemical composition, defect concentration, texture, or grain size. These parameters and their linking across temporal and spatial scales determine a material’s behavior and performance in a given environment. Researchers predict and control functionality through understanding the role of defects and interfaces and their behavior under extreme environments.

Predicting macroscale failure in polycrystalline metallic materials is challenging due to the complex physical processes involved and a lack of models that consider microstructural effects. The Laboratory team aims to discover reliable phenomenological connections relating the time-evolving spatial distribution of stress hot spots to loading conditions, material properties, and spatial distributions of grain morphology and texture. This information could lead to better simulation models to predict macroscale behavior of materials under extreme loadings and, eventually, to a principled understanding of how these materials fail when subjected to shock.

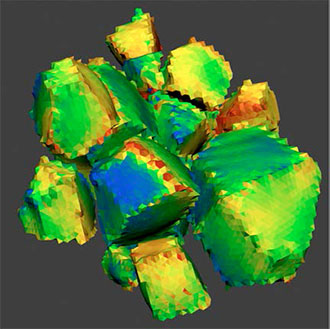

Los Alamos researchers are coupling experimental and computational methodology to improve predictive damage abilities. The Laboratory uses open-source software to create an unlimited supply of 3D synthetic microstructures statistically similar to the original material. The team can export the synthetic microstructures for use in a variety of models and simulations, enabling comparisons to be drawn. Turning materials into digitally generated microstructures allows scientists to study the actual distributions of microstructural features. Moreover, structure-property information can help link microstructural details to damage evolution under various loading states. The publication reported examples of this technique for investigating ductile damage and evaluating a material’s response to loading.

The Laboratory team performed both the experimental and the computational components of the work. The experimental work included: 1) microstructural and statistical characterization of materials and generation of digital microstructures, and 2) mechanical testing to examine incipient damage from dynamic loading of materials. The investigators developed a rate-dependent macroscale damage model and performed polycrystal simulations of materials under loading trajectories that match those in experiments. These simulations, run on statistically representative digital microstructures, provided first-order quantitative estimates of the von Mises stress (a metric used to determine whether the material has started to yield at any point) on grain surfaces in the polycrystal.

Figure 8. An expanded view of simulated von Mises stress (used to predict yielding of materials under a loading condition) of hot spots on grain surfaces in polycrystalline tantalum.

The von Mises stress is often used to determine whether an isotropic and ductile metal will yield when subjected to a complex loading condition. In this research, plotting the von Mises stress and identifying hot spots (stress peaks) helped pinpoint when in time and where in microstructural space damage will nucleate. The investigators used this information to predict damage development under specific loading conditions. The team expects that this method could lead to more well-founded simulation models to predict macro-scale behavior of materials under extreme loadings and, eventually, to a principled understanding of how these materials fail when subjected to shock.
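The yield check described above can be sketched in a few lines. This is a minimal illustration using the standard von Mises formula for a symmetric stress tensor; the stress states, the `threshold` value, and the labels are hypothetical and do not come from the Laboratory’s simulations.

```python
import math

def von_mises(stress):
    """Von Mises equivalent stress for a symmetric 3x3 Cauchy stress tensor."""
    (s11, s12, s13), (_, s22, s23), (_, _, s33) = stress
    normal = (s11 - s22) ** 2 + (s22 - s33) ** 2 + (s33 - s11) ** 2
    shear = s12 ** 2 + s23 ** 2 + s13 ** 2
    return math.sqrt(0.5 * normal + 3.0 * shear)

# Hypothetical stress states (MPa) at three locations on grain surfaces
states = {
    "uniaxial":    [[100.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
    "hydrostatic": [[50.0, 0.0, 0.0], [0.0, 50.0, 0.0], [0.0, 0.0, 50.0]],
    "pure_shear":  [[0.0, 40.0, 0.0], [40.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
}

threshold = 60.0  # hypothetical hot-spot threshold, MPa

# Flag locations whose equivalent stress exceeds the threshold
hot_spots = [name for name, s in states.items() if von_mises(s) > threshold]
print(hot_spots)
```

The hydrostatic state is never flagged because the von Mises stress depends only on the deviatoric (shape-changing) part of the stress, which is why it suits ductile metals whose yielding is insensitive to pressure.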

The authors conclude that this method provides an unlimited supply of digital microstructures, which are statistically representative of the original material. Synthetic volumes are surface meshed to enable moving models into finite element methods (FEM) or other simulations. The team interprets physical behavior by comparing experimental and calculated free surface velocity responses, with hypothesized mechanisms included in the theoretical model used to conduct the simulations.

Reference: “3D Microstructures for Materials and Damage Models,” Advanced Materials & Processes 175, February/March 2017 p. 16. Authors: Veronica Livescu (Materials Science in Radiation and Dynamics Extremes, MST-8), Curt Bronkhorst (Fluid Dynamics and Solid Mechanics, T-3), and Scott Vander Wiel (Statistical Sciences, CCS-6).

The Joint DoD/DOE Munitions Technology Development Program and the NNSA Science Campaign 2 funded the work, which supports the Lab’s Nuclear Deterrence and Global Security mission areas and Materials for the Future science pillar by paving the path towards predicting and controlling materials’ performance and functionality. Technical contact: Veronica Livescu

Physics

Pulse dilation photomultiplier tube improves temporal resolution

Los Alamos researchers and their international collaborators have completed a proof-of-concept experiment on a Pulse Dilation Photomultiplier Tube (PD-PMT) prototype intended to supplant traditional PMTs in applications that require faster signal recording than is currently achievable. The team is developing the PD-PMT to take better advantage of gas Cherenkov detector measurements of inertial confinement fusion (ICF) gamma ray signals. The resulting highly resolved carbon areal density and fusion reaction histories will better inform High Energy Density Physics (HEDP) experiments and the quest for thermonuclear ignition at the National Ignition Facility (NIF) located at Lawrence Livermore National Laboratory.

Gas Cherenkov detectors, such as the Gamma Reaction History diagnostic, have inherent temporal dispersion on the order of only approximately 10 ps. This fast time response is essentially squandered in coupling the optical Cherenkov signal to the current state-of-the-art PMTs, which are only capable of approximately 100 ps resolution. Although today’s streak cameras are capable of less than 10 ps resolution, they are not suitable for identifying small signals in high-radiation environments, and are not particularly efficient in applications where 1D spatial imaging is not required.

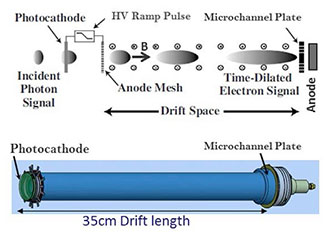

Figure 9. (Top): Pulse Dilation PMT (PD-PMT) scheme depicting the dilation of a transient photoelectron signal bunch down an elongated drift region in order to enhance temporal resolution of high frequency signal components. (Bottom): Isometric view of the tube prior to packaging.

The PD-PMT scheme allows a ramped-down accelerating voltage at the photocathode during the period of signal-of-interest to cause a velocity differential in the photoelectron signal bunch, where earlier electrons travel faster than later electrons. This results in an axial dispersal or “pulse dilation” of the signal as it passes down a drift tube section containing an axial magnetic field to confine the electron bunch.

Temporal magnification on the order of more than a factor of 20 is feasible, yielding enhanced time resolution at the anode with respect to the electrical recording system. A microchannel plate at the end of the drift section provides signal gain. The drift tube section of the prototype is approximately 35 cm long. PD-PMT is similar to a streak camera sans 1D imaging capability where the transient signal is streaked axially instead of transversely. The absence of imaging allows the radiation sensitive components, i.e., phosphor and CCD camera, to be removed, enabling extremely high bandwidth measurements to be made in a high radiation environment.
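The dilation mechanism can be illustrated with a simple time-of-flight model. This is a rough sketch, assuming nonrelativistic electrons that gain their full energy instantly at the photocathode, a linear voltage ramp, and no space-charge effects. Only the 35 cm drift length comes from the text; the starting voltage and ramp rate are hypothetical values chosen for illustration.

```python
import math

E_OVER_M = 1.75882001076e11  # electron charge-to-mass ratio, C/kg

def arrival_time(t_emit, volts0, ramp_rate, drift_length):
    """Arrival time at the end of the drift tube for an electron emitted at t_emit.

    The accelerating voltage ramps down linearly: V(t) = volts0 - ramp_rate * t.
    """
    voltage = volts0 - ramp_rate * t_emit
    speed = math.sqrt(2.0 * E_OVER_M * voltage)   # nonrelativistic drift speed
    return t_emit + drift_length / speed

def magnification(t1, t2, volts0, ramp_rate, drift_length):
    """Ratio of arrival-time separation to emission-time separation."""
    dt_out = arrival_time(t2, volts0, ramp_rate, drift_length) \
        - arrival_time(t1, volts0, ramp_rate, drift_length)
    return dt_out / (t2 - t1)

DRIFT_LENGTH = 0.35      # m, approximate prototype drift length from the text
VOLTS0 = 1000.0          # V, hypothetical starting voltage
RAMP_RATE = 1.8e12       # V/s, hypothetical ramp-down rate

m_ramped = magnification(0.0, 135e-12, VOLTS0, RAMP_RATE, DRIFT_LENGTH)
m_static = magnification(0.0, 135e-12, VOLTS0, 0.0, DRIFT_LENGTH)
print(f"magnification with ramp: {m_ramped:.1f}, without ramp: {m_static:.1f}")
```

With no ramp, every electron has the same speed and the pulse arrives unstretched. With the ramp, later electrons are slower and fall progressively behind, so the separation at the anode grows with drift length and ramp rate.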

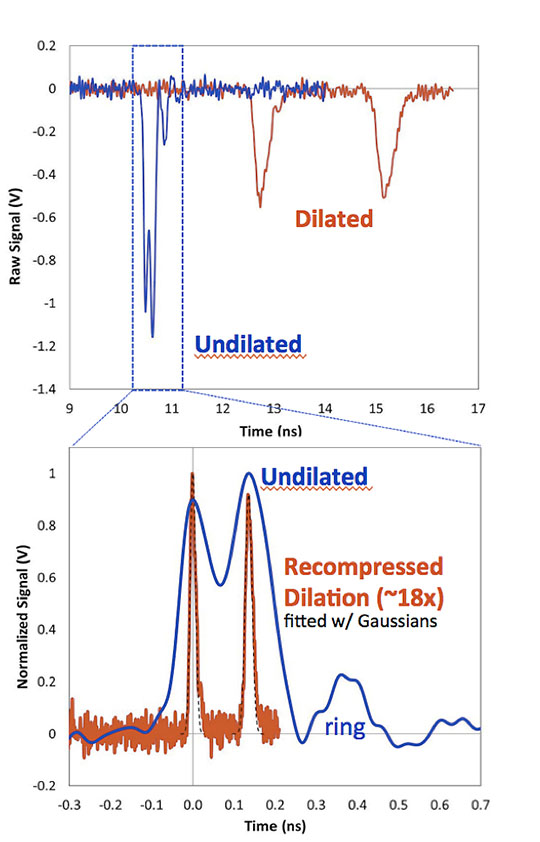

Figure 10. (Upper plot): Raw data from the PD-PMT without (blue) and with (red) dilation. (Bottom plot): Dilated pulses with a recompressed time scale to match the peak spacing of the undilated signal, demonstrating a temporal magnification of 17.9x (both signals are normalized to a peak height of 1).

The primary test was resolution of double ps-scale laser pulses where the duration between pulses was controlled by mirror spacing in a Michelson interferometer. The upper plot in Figure 10 shows the raw data from a double pulse with a time between pulses of 135 ps. The blue “undilated” trace is taken with the PD-PMT operated in dc mode as a standard PMT. The double pulse is just barely resolvable. Detector ringing due to the short transient nature of the signal is pronounced, reducing signal quality. The red “dilated” trace is a measurement of the same double pulse during the dilation ramp. The stretching of the signal at the anode results in near elimination of ringing and improved signal quality. In the lower plot the dilated signal is recompressed, by approximately a factor of 18 in time, to match the undilated peak spacing, resulting in a highly resolved double peak structure. Each recompressed peak is fit with a Gaussian (dashed black line) having a full width at half maximum on the order of 20 ps. By reducing the mirror spacing, the team determined that resolution of the dilated pulses is possible down to approximately 10 ps between pulses.
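The recompression step amounts to dividing the recorded time axis by the temporal magnification. The sketch below uses the 135 ps pulse spacing and the 17.9x magnification quoted above; the idealized peak positions and the helper names are assumptions for illustration, not the team’s analysis code.

```python
import math

def recompress(times_ps, magnification):
    """Map times recorded at the anode back onto the original signal time scale."""
    return [t / magnification for t in times_ps]

def gaussian_fwhm(sigma):
    """Full width at half maximum of a Gaussian with standard deviation sigma."""
    return 2.0 * math.sqrt(2.0 * math.log(2.0)) * sigma

MAGNIFICATION = 17.9     # temporal magnification reported in Figure 10
PULSE_SPACING_PS = 135.0 # spacing of the double pulse set by the interferometer

# Idealized dilated peak positions at the anode (assumption: pure linear stretch)
dilated_peaks_ps = [0.0, PULSE_SPACING_PS * MAGNIFICATION]

recompressed = recompress(dilated_peaks_ps, MAGNIFICATION)
spacing_ps = recompressed[1] - recompressed[0]
print(f"recompressed peak spacing: {spacing_ps:.1f} ps")
```

The same division applies to any width fitted on the dilated trace: a Gaussian width measured at the anode maps to a width smaller by the magnification factor on the original time scale.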

Hans Herrmann (Plasma Physics, P-24) oversaw the experiments, which were conducted in the United Kingdom at the ORION Laser Facility located at the Atomic Weapons Establishment (AWE) in collaboration with AWE scientists Colin Horsfield and Steve Gales, as well as the commercial developers (Sydor Technologies subsidiaries Photek and Kentech Instruments) of this new technology. The Los Alamos team incorporating the PD-PMT into the gas Cherenkov detector at the National Ignition Facility included Hans Herrmann, Frank Lopez, Yongho Kim, Alex Zylstra, Kevin Meaney, and Hermann Geppert-Kleinrath (P-24) working in collaboration with Lawrence Livermore National Laboratory employees. NNSA Science Campaign 10 (Inertial Confinement Fusion) funded the work, which supports the Laboratory’s Nuclear Deterrence mission area and the Nuclear and Particle Futures and Science of Signatures science pillars. Technical contact: Hans Herrmann