STE Highlights, May 2020

Awards and Recognition

LANL teams place in ARPA-E Grid Optimization Competition

Three Los Alamos teams entered and placed in the top 10 of the Department of Energy’s Advanced Research Projects Agency-Energy (ARPA-E) Grid Optimization Competition.

The top 10 winners were announced by U.S. Energy Secretary Dan Brouillette. The winners shared a total of $3.4 million, which is to be used to further develop their respective approaches and pursue industry adoption of their technologies.

The LANL project GO-SNIP won second place in the competition. This project was led by Andreas Waechter, who is currently on sabbatical at LANL in the Grid Optimization Group. The LANL project Gravityx won fourth place. Its team consisted of two LANL staff members from the Grid Optimization Group, Nathan Lemons and Hassan Lionel Hijazi, who participated as individuals. The LANL project ARPA-E Benchmark secured 10th place. Carleton Coffrin led this project, whose solution is now open source and available on GitHub.

This was the first in a series of challenges to develop software solutions for difficult power grid management problems. The competition’s intent is to create a more reliable, resilient, and secure American electricity grid. Preparations are currently underway for challenge 2, which will build on the models used in challenge 1.

Watch a video: ARPA-E Announces the Winners of Round 1 of the GO Competition

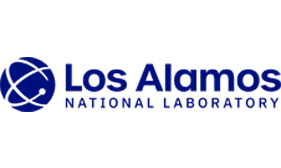

Cincio wins first place in IBM Quantum Challenge

Łukasz Cincio

Łukasz Cincio of the Physics of Condensed Matter and Complex Systems (T-4) Group in the Los Alamos Theoretical Division won first place in the May 2020 IBM Quantum Challenge. In its fourth year, the IBM Quantum Experience put forth four quantum programming exercises to be solved in four days.

Cincio placed first out of 1,745 participants. On the most challenging of the four exercises, Cincio’s result beat the best solution found by IBM’s own experts, offering a solution even they didn’t think was possible.

Cincio achieved his top-performing result using a machine learning algorithm that he created as part of a Laboratory Directed Research and Development (LDRD) project. The win was a substantial victory for the entire project team because it demonstrated the power and credibility of the software, which automates problem-solving that would otherwise fall to a human.

IBM Quantum Challenge

“The technique that I created as part of the LDRD came out on top, which I find very reassuring that the work we do is of high quality,” said Cincio. “As typically happens with machine learning, the most optimal solution to the problem was very unintuitive and surprising, even to the organizers, whose solution was slightly less optimal.”

LDRD team members included Scott Pakin, Wojciech Zurek, Nikolai Sinitsyn, Andrew Arrasmith, Patrick Coles, Hristo Djidjev, Andrew Sornborger, Petr Anisimov, Avadh Saxena, Yigit Subasi, Stephan Eidenbenz, Łukasz Cincio, Rolando Somma, Tyler Volkoff, and Akira Sone.

“I had a lot of fun participating in the IBM Challenge,” Cincio said. “It was a real-time competition—the best score kept changing as the challenge was ongoing and new participants were joining in. We don’t have much of this in our everyday work.”

Analytics, Intelligence and Technology

Another D-Wave advantage: High-quality solutions to tricky combinatorial optimization problems

A recent study is helping to identify the types of optimization problems that are solved faster via a D-Wave computer than with classical computing approaches. The work is establishing a foundation for when to use a quantum annealer, when to use a classical CPU, and when to try hybrid algorithms.

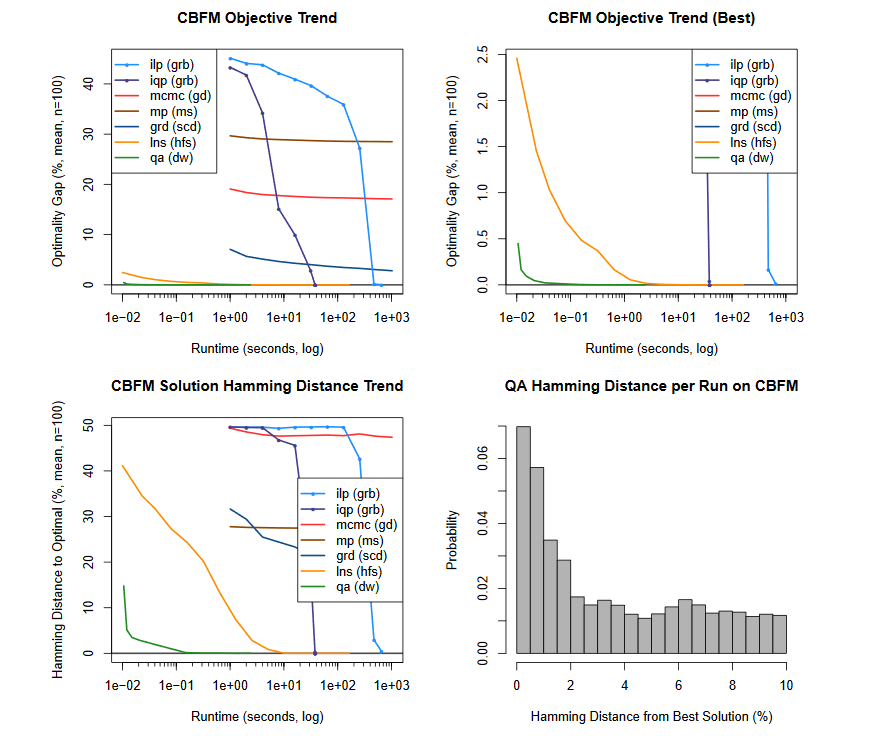

Performance profile (top) and Hamming distance (bottom) analysis for the corrupted biased ferromagnet instance. D-Wave has a significant probability (> 0.12) of returning a solution whose Hamming distance from the globally optimal solution is less than 1%.
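The caption’s “Hamming distance of less than 1%” can be read as the fraction of variables on which a returned solution differs from the optimal one. The minimal sketch below assumes that normalization (differing positions divided by the number of variables); the helper names and the 2,000-spin configurations are hypothetical illustrations, not code or data from the study.

```python
from typing import Sequence


def normalized_hamming_distance(a: Sequence[int], b: Sequence[int]) -> float:
    """Fraction of positions at which two equal-length spin configurations differ."""
    if len(a) != len(b):
        raise ValueError("configurations must have the same length")
    return sum(x != y for x, y in zip(a, b)) / len(a)


def fraction_within(samples, optimum, threshold=0.01):
    """Share of samples whose normalized Hamming distance to the optimum is below threshold."""
    close = sum(normalized_hamming_distance(s, optimum) < threshold for s in samples)
    return close / len(samples)


# Hypothetical example: 2,000 spins whose optimal configuration is all +1
optimum = [1] * 2000
near = [-1] * 15 + [1] * 1985   # differs from the optimum in 15 of 2,000 spins
far = [-1] * 100 + [1] * 1900   # differs from the optimum in 100 of 2,000 spins
print(normalized_hamming_distance(near, optimum))
print(fraction_within([near, far, far, far], optimum))  # 1 of 4 samples is within 1%
```

Applied to many annealer samples, `fraction_within` gives an empirical probability of landing close to the optimum, which is the kind of quantity the caption reports.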

In the study, Los Alamos researchers in the Information Systems and Modeling Group (A-1), along with a collaborator at the University of Illinois Urbana-Champaign who joined the project through LANL’s Quantum Computing Summer School, asked how well Los Alamos’ D-Wave computer, a state-of-the-art quantum annealing device, could solve different types of ferromagnetic problems. Such problems are often used in condensed matter physics as proxies for understanding the behavior of magnetic materials.

The study’s new insight is that different types of ferromagnetic problems can also produce particularly tricky mathematical optimization problems. Solving them is a considerable computational challenge comparable to finding a needle in a haystack: searching for the best solution among an exponential number of possible solutions.
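To make the “needle in a haystack” concrete: in the standard Ising convention, each of n spins takes the value -1 or +1, a configuration s is scored by the energy E(s) = Σ h_i s_i + Σ J_ij s_i s_j, and the best solution is the configuration with the lowest energy. A complete search must consider all 2^n configurations. The following is a minimal Python sketch of that brute-force search; the 4-spin instance is a hypothetical toy, far smaller than the problems in the study.

```python
import itertools


def ising_energy(h, J, s):
    """E(s) = sum_i h_i*s_i + sum_(i,j) J_ij*s_i*s_j for spins s_i in {-1, +1}."""
    linear = sum(h[i] * s[i] for i in range(len(s)))
    quadratic = sum(w * s[i] * s[j] for (i, j), w in J.items())
    return linear + quadratic


def brute_force_ground_state(h, J):
    """Complete search: enumerate all 2**n spin configurations and keep the lowest energy."""
    best_s, best_e = None, float("inf")
    for s in itertools.product((-1, 1), repeat=len(h)):
        e = ising_energy(h, J, s)
        if e < best_e:
            best_s, best_e = s, e
    return best_s, best_e


# Hypothetical toy instance: a 4-spin ferromagnetic ring (J = -1 favours aligned
# neighbours) with a small field h = 0.1 that favours spins pointing down (-1).
h = [0.1] * 4
J = {(0, 1): -1, (1, 2): -1, (2, 3): -1, (0, 3): -1}
print(brute_force_ground_state(h, J))  # all spins down is the unique optimum
```

Each extra variable doubles the number of candidates, so at 2,000 variables (2^2000 configurations) plain enumeration is hopeless; practical complete solvers must prune the search, and heuristics give up the optimality guarantee.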

Using problems with more than 2,000 optimization variables, the study compared the D-Wave system with both heuristic and complete search algorithms, the established classical methods for solving optimization problems. The D-Wave system demonstrated significant gains in runtime (speed) and in identifying the structure of the optimal solution, which is a big hint about where the needle is located in the haystack.
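Heuristic methods trade the guarantee of a complete search for speed, but they can stall in a local minimum: a configuration that no small change improves. The sketch below uses a simple greedy single-spin-flip descent as a stand-in for a classical heuristic (it is not one of the solvers benchmarked in the study) on a hypothetical 4-spin biased ring. Started from all spins up, it cannot move, because every single flip breaks two aligned bonds, even though the all-down configuration has lower energy.

```python
def ising_energy(h, J, s):
    """E(s) = sum_i h_i*s_i + sum_(i,j) J_ij*s_i*s_j for spins s_i in {-1, +1}."""
    linear = sum(h[i] * s[i] for i in range(len(s)))
    quadratic = sum(w * s[i] * s[j] for (i, j), w in J.items())
    return linear + quadratic


def greedy_descent(h, J, start):
    """Repeatedly apply the single spin flip that lowers E the most; stop when none does."""
    s = list(start)
    e = ising_energy(h, J, s)
    while True:
        best_i, best_e = None, e
        for i in range(len(s)):
            s[i] = -s[i]              # try flipping spin i
            trial = ising_energy(h, J, s)
            s[i] = -s[i]              # undo the trial flip
            if trial < best_e:
                best_i, best_e = i, trial
        if best_i is None:
            return tuple(s), e        # local minimum: no single flip lowers E
        s[best_i] = -s[best_i]
        e = best_e


# Hypothetical toy biased ferromagnet: ring couplings J = -1, field h = 0.1 favouring -1
h = [0.1] * 4
J = {(0, 1): -1, (1, 2): -1, (2, 3): -1, (0, 3): -1}
print(greedy_descent(h, J, (1, 1, 1, 1)))    # stuck: stays at all spins up
print(greedy_descent(h, J, (1, -1, -1, -1))) # a luckier start reaches all spins down
```

The outcome depends entirely on the starting point, which is why the ability to avoid such traps matters.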

“This work provides new insights into the computation properties of quantum annealing and suggests that this model has an uncanny ability to avoid local minima and quickly identify the structure of high-quality solutions,” says Carleton Coffrin, lead researcher for the study. “This work also prompts the development of hybrid algorithms that combine the best features of quantum annealing and state-of-the-art classical approaches.”

Hardware for problem solving

The D-Wave system is a quantum annealer, a specialized type of computing hardware. Quantum annealing is one of several approaches being explored to scale up mission-critical computational technologies, because some of the most challenging mathematical problems require more sophisticated and novel hardware.

Los Alamos researchers have previously shown that D-Wave can successfully solve some types of quadratic unconstrained binary optimization (QUBO) problems and Ising models. The current study extends that knowledge to three new problem classes: the biased ferromagnet, the frustrated biased ferromagnet, and the corrupted biased ferromagnet. The analysis of the corrupted biased ferromagnet is particularly exciting because it shows a significant performance gain (100 times faster) on challenging optimization problems.
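QUBO problems and Ising models are two encodings of the same kind of problem. QUBO uses binary variables x_i ∈ {0, 1}, Ising uses spins s_i ∈ {-1, +1}, and the substitution x_i = (1 + s_i)/2 converts one into the other. A minimal sketch of that standard conversion follows; the function names and the dictionary-of-coefficients representation are hypothetical choices for illustration, not the D-Wave software interface.

```python
import itertools


def qubo_energy(Q, x):
    """f(x) = sum over (i, j) of Q_ij * x_i * x_j with x_i in {0, 1}; Q_ii act as linear terms."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def qubo_to_ising(Q):
    """Substitute x_i = (1 + s_i) / 2 and collect fields h, couplings J, and a constant offset."""
    h, J, offset = {}, {}, 0.0
    for (i, j), w in Q.items():
        if i == j:
            # Q_ii * x_i = Q_ii/2 + (Q_ii/2) * s_i
            h[i] = h.get(i, 0.0) + w / 2
            offset += w / 2
        else:
            # Q_ij * x_i * x_j = (Q_ij/4) * (1 + s_i + s_j + s_i*s_j)
            J[(i, j)] = J.get((i, j), 0.0) + w / 4
            h[i] = h.get(i, 0.0) + w / 4
            h[j] = h.get(j, 0.0) + w / 4
            offset += w / 4
    return h, J, offset


def ising_energy(h, J, offset, s):
    """E(s) = offset + sum_i h_i * s_i + sum_(i,j) J_ij * s_i * s_j."""
    return (offset
            + sum(hi * s[i] for i, hi in h.items())
            + sum(w * s[i] * s[j] for (i, j), w in J.items()))


# Hypothetical 2-variable QUBO: reward setting each bit, penalize setting both.
Q = {(0, 0): -1.0, (1, 1): -1.0, (0, 1): 2.0}
h, J, offset = qubo_to_ising(Q)
for x in itertools.product((0, 1), repeat=2):
    s = tuple(2 * xi - 1 for xi in x)  # x = 0 -> s = -1, x = 1 -> s = +1
    print(x, qubo_energy(Q, x), ising_energy(h, J, offset, s))
```

Each row prints the same energy twice, confirming that both encodings score every assignment identically, so a solver for one form also solves the other.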

Funding and mission

This research was funded by a Los Alamos Laboratory Directed Research and Development award. The work supports the Laboratory’s Global Security mission area and the Integrating Information, Science, and Technology for Prediction capability pillar.

Reference: Yuchen Pang (U of I at Urbana-Champaign), Carleton Coffrin, Andrey Y. Lokhov, Marc Vuffray. “The Potential of Quantum Annealing for Rapid Solution Structure Identification.” Accepted April 2020. https://arxiv.org/abs/1912.01759

Videos:

- "Science First" - The best path to quantum supremacy

- The Potential of Quantum Annealing for Rapid Solution Structure Identification

Technical contact: Carleton Coffrin

Chemistry

New quantum dots for toxic-element-free, next-gen solar cells

The solar cell is often thought of as a “green and clean” energy source, but some current solar cell technologies rely on toxic materials such as lead and cadmium, which limits their scalability, disposability, and usefulness. A recent breakthrough by Los Alamos researchers could change that: the researchers created novel quantum dots (nanoscale semiconductors) from the nontoxic elements zinc, copper, indium, and selenium.

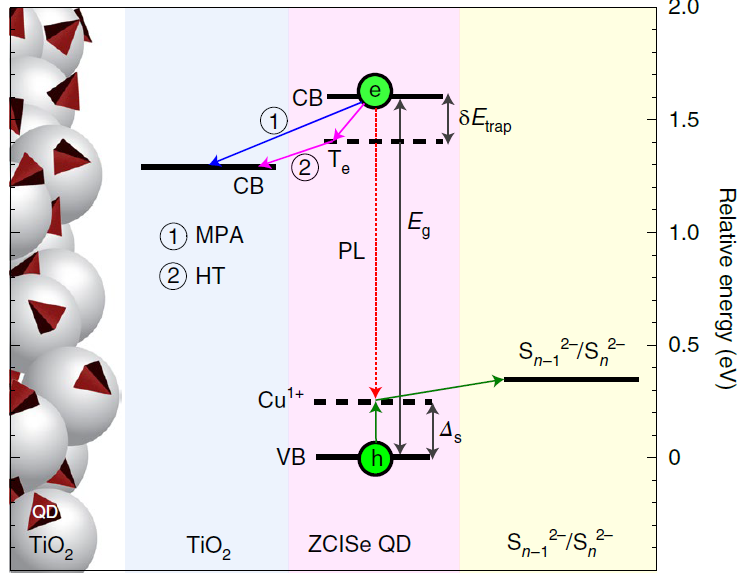

An illustration and energy diagram of the photoconversion via non-toxic quantum dots (ZCISe QD).

These new quantum dots are not only safer for people and the environment, but they are also inexpensive and high performing.

“We achieved consistent photovoltaic performance with nearly 85 percent photon-to-electron conversion efficiencies and highly reproducible power conversion efficiencies of 9 to 10 percent,” said Victor Klimov of the Physical Chemistry and Applied Spectroscopy Group (C-PCS). That performance is on par with existing quantum dot solar cell technologies, but without the highly toxic heavy metals.

This research provides the foundation for large-scale, widespread use of inexpensive, solution-processable solar cells. This significant milestone for energy technology was featured on the May cover of Nature Energy.

The mechanism of quantum dot solar cells

The goal of a solar cell is to convert sunlight, which consists of photons, into electrical current carried by electrons. The higher this photon-to-electron conversion rate, the more efficient the solar cell. Klimov and his team achieved a photon-to-electron conversion efficiency of 85 percent, which is considered nearly perfect.
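The photon-to-electron conversion efficiency (also called incident photon-to-current efficiency, IPCE, or external quantum efficiency) compares the rate at which electrons leave the device as current with the rate at which photons arrive. Below is a minimal sketch of that standard calculation for monochromatic light; the photocurrent, optical power, and wavelength are hypothetical values chosen only to illustrate a result near 85 percent, not measurements from the study.

```python
# Exact SI values of the physical constants involved
PLANCK_J_S = 6.62607015e-34
LIGHT_SPEED_M_S = 299_792_458
ELEMENTARY_CHARGE_C = 1.602176634e-19


def photon_rate(power_w, wavelength_m):
    """Photons per second in a monochromatic beam: P / (h * c / wavelength)."""
    return power_w * wavelength_m / (PLANCK_J_S * LIGHT_SPEED_M_S)


def external_quantum_efficiency(current_a, power_w, wavelength_m):
    """Electrons collected per incident photon: (I / e) / photon rate."""
    return (current_a / ELEMENTARY_CHARGE_C) / photon_rate(power_w, wavelength_m)


# Hypothetical measurement: 1 mW of 500 nm light producing 0.343 mA of photocurrent
print(f"{external_quantum_efficiency(0.343e-3, 1e-3, 500e-9):.2f}")  # about 0.85
```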

This high conversion efficiency was a pleasant surprise considering the new quantum dots had many defects.

“Due to their very complex composition in which four elements are combined in the same nanosized particle, these dots are prone to defects,” Klimov said. Yet instead of reducing photoconversion, the defects actually enhanced it.

These “functional” defects were identified as shallow surface-located electron traps and native Cu-related hole-trapping centers. The term defect in this situation refers to the imperfect crystal lattice, not an imperfect outcome. On the contrary, the outcome was improved because the defects assisted both electron transfer to the electrode and hole reduction by the electrolyte.

Conversion might be pushed higher still by optimizing the cathode/electrolyte system, since the device’s photoanode was found to be almost fully optimized already.

Greener and cleaner solar cells made with the nontoxic quantum dots.

Further applications of quantum dots

Quantum dots have already found many uses, and more are coming. In particular, they are very efficient light emitters. They are distinct from other types of light-emitting materials, as their color is not fixed and can be easily tuned by adjusting the quantum dot size. This property has been utilized in displays and televisions, and soon will help make more efficient, color-adjustable light bulbs.
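The size-tunable color comes from quantum confinement: squeezing the electron and hole into a particle of radius R raises the effective band gap, so smaller dots emit bluer light. A common first approximation is the Brus equation, shown here as a textbook estimate rather than a model used in this work, where E_g,bulk is the bulk band gap, m_e* and m_h* are the electron and hole effective masses, and ε_r is the semiconductor’s relative permittivity; the emission wavelength estimate ignores the Stokes shift.

```latex
E_{\mathrm{gap}}(R) \approx E_{g,\mathrm{bulk}}
  + \frac{\hbar^{2}\pi^{2}}{2R^{2}}\left(\frac{1}{m_e^{*}} + \frac{1}{m_h^{*}}\right)
  - \frac{1.8\, e^{2}}{4\pi\varepsilon_{0}\varepsilon_{r} R},
\qquad
\lambda_{\mathrm{emission}} \approx \frac{hc}{E_{\mathrm{gap}}(R)}
```

Because the confinement term scales as 1/R² while the Coulomb term scales only as 1/R, the gap grows, and the emission shifts toward the blue, as small dots shrink.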

Unique properties of quantum dots have also been exploited in applications of direct relevance to national security, including high-sensitivity infrared photodetectors, single-photon sources for secure communication, high-performance photocathodes for accelerators, multifunctional radiation detectors, and many others.

The nontoxic composition of these new quantum dots will help further push the boundaries of both the technology and its applications.

Funding and mission

The studies of quantum dot photophysical properties and charge transfer at quantum dot interfaces were supported by the Office of Science, U.S. Department of Energy. The research into quantum dot synthesis and device fabrication was funded by a Laboratory Directed Research and Development award. This work supports the Laboratory’s Energy Security mission area and the Materials for the Future capability pillar.

Reference: Jun Du, Rohan Singh, Igor Fedin, Addis Fuhr, and Victor Klimov. “Spectroscopic insights into high defect tolerance of Zn:CuInSe2 quantum-dot-sensitized solar cells.” Nature Energy. May 2020. DOI: 10.1038/s41560-020-0617-6

Technical contact: Victor I. Klimov

Earth and Environmental Sciences

Protecting the global food chain: Best predictions of El Niño impacts

El Niño is a complex part of the climate system that results from periodic cycles in near-surface ocean temperatures. It is considered the warm phase of this ocean temperature cycle, whereas La Niña is the cold phase. Scientists have documented El Niño events every three to five years on average, with a super El Niño event (the most extreme version) occurring every 15 to 20 years. But computer models cannot yet accurately predict, for all regions, the impacts these events will have on water supplies and vegetation, such as soil moisture content, agricultural crop growth, and massive plant die-offs, leaving many local farmers and economies guessing and unprepared.

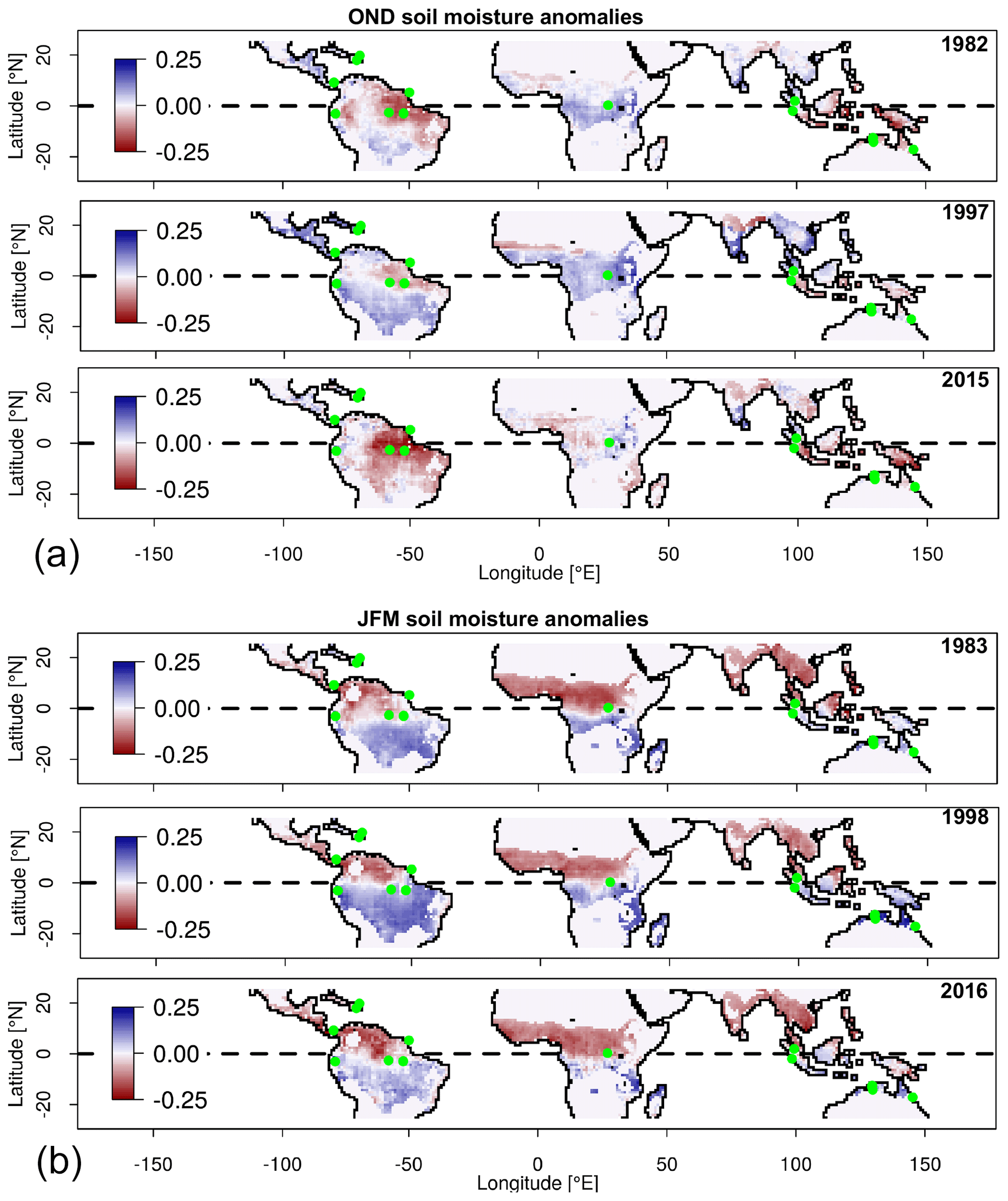

Soil moisture anomalies during super El Niño years during the months of October, November, December (OND) or January, February, March (JFM).

Los Alamos researchers recently led a scientific collaboration that asked the question, “How can we better understand the climate anomalies during an El Niño event and its impacts on soil moisture?” To better predict future impacts, the researchers needed to look at previous data and build models with better predictive power. They dove into data from the Global Land Data Assimilation System (GLDAS) from the three most recent super El Niño events (1982–1983, 1997–1998, and 2015–2016) to find some answers.

“Our results can be used to improve estimates of spatiotemporal differences in El Niño impacts on soil moisture in tropical hydrology and ecosystem models at multiple scales,” said Kurt Solander, a hydrologist in the Los Alamos Computational Earth Science Group (EES-16).

The researchers noted consistent differences in soil moisture in different areas. For example, consistent decreases in soil moisture were seen in the Amazon basin and maritime southeastern Asia. The most consistent increases were seen over eastern Africa. In some cases, an increase in precipitation did not in fact lead to an increase in soil moisture because of other factors at play, such as atmospheric processes that originated outside of the region. Their methods allowed for a spatially continuous estimate across regions as well as an assessment of soil moisture across the peak El Niño season of October to March.
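The idea of a “consistent” change can be made precise. For each super El Niño event, compute the seasonal soil moisture anomaly at a location (the value in the event season minus the long-term mean for the same season), then check whether the anomalies from all three events share the same sign. A minimal sketch of that logic follows; the classification rule, baseline, and numbers are illustrative assumptions, not the study’s GLDAS workflow or data.

```python
from statistics import mean


def seasonal_anomaly(event_value, climatology):
    """Soil moisture in an El Niño season minus the long-term mean for that season."""
    return event_value - mean(climatology)


def classify(anomalies):
    """Label a location by whether every event shifted soil moisture the same way."""
    if all(a < 0 for a in anomalies):
        return "consistent decrease"
    if all(a > 0 for a in anomalies):
        return "consistent increase"
    return "mixed"


# Hypothetical volumetric soil moisture (m3/m3) for one grid cell in the OND season
climatology = [0.30, 0.32, 0.28, 0.31, 0.29]  # long-term baseline (hypothetical)
events = {"1982-1983": 0.24, "1997-1998": 0.22, "2015-2016": 0.25}
anomalies = [seasonal_anomaly(v, climatology) for v in events.values()]
print(classify(anomalies))
```

A “mixed” label is where factors such as moisture transported from outside the region can make one event’s response differ from another’s.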

El Niño influences weather globally

El Niño events are often associated with droughts in some of the world’s more vulnerable tropical regions, but these events also influence global weather patterns. Better understanding and prediction of these events is essential for helping farmers and water managers in tropical regions prepare for impacts on crops, local economies, and global food chains.

“This new study drills down to reveal how El Niño can affect the moisture content of soil, which controls the growth of plants, the food we eat, and how much water from land gets fed back into the atmosphere through evaporation,” Solander said.

As more countries have come to depend on food exports for their economies and on imports to feed their populations, successful crop growth has become a global issue.

“With this new information,” Solander said, “water managers in these areas can, for example, regulate how much water they retain in a reservoir to compensate for the expected decreases in available moisture for local agricultural crops.”

Based on the last three super El Niño events, the researchers predict that the northern Amazon basin and the maritime regions of southeastern Asia, Indonesia, and New Guinea will experience large reductions in soil moisture during the next super El Niño.

However, plants do more than provide food. They can help moderate global warming by storing carbon, and they influence the transport of water to different regions through precipitation recycling. The next step of this research will therefore be to gain insight into atmospheric moisture feedbacks from vegetation as plants adjust to climatic warming, which in turn will help researchers understand how precipitation will change on a global scale.

Funding and mission

This project was supported as part of the Next-Generation Ecosystem Experiments–Tropics, funded by the Office of Biological and Environmental Research in the U.S. Department of Energy’s Office of Science, through the Terrestrial Ecosystem Science program. The work supports the Laboratory’s Global Security mission area and the Integrating Information, Science, and Technology for Prediction and the Complex Natural and Engineered Systems capability pillars.

Reference: Kurt C. Solander, Brent D. Newman, Alessandro Carioca de Araujo (Brazilian Agricultural Research Corporation), Holly R. Barnard (University of Colorado Boulder), Z. Carter Berry (Chapman University), Damien Bonal (Institut National de la Recherche Agronomique), Mario Bretfeld (Kennesaw State University), Benoit Burban (Institut National de Recherche en Agriculture), Luiz Antonio Candido (National Institute for Amazonia Research), Rolando Célleri (University of Cuenca), Jeffery Q. Chambers (Lawrence Berkeley National Laboratory), Bradley O. Christoffersen (University of Texas Rio Grande Valley), Matteo Detto (Princeton University; Smithsonian Tropical Research Institute), Wouter A. Dorigo (TU Wien), Brent E. Ewers (University of Wyoming), Savio José Filgueiras Ferreira, Alexander Knohl (University of Göttingen), L. Ruby Leung (Pacific Northwest National Laboratory), Nate G. McDowell, Gretchen R. Miller (Texas A&M University), Maria Terezinha Ferreira Monteiro, Georgianne W. Moore, Robinson Negron-Juarez, Scott R. Saleska (University of Arizona), Christian Stiegler, Javier Tomasella (National Centre for Monitoring and Early Warning of Natural Disasters), and Chonggang Xu. “The pantropical response of soil moisture to El Niño.” Hydrol. Earth Syst. Sci., 24, 2303–2322, 2020. https://doi.org/10.5194/hess-24-2303-2020

Technical contact: Kurt Solander

Infrastructure Enhancement

Los Alamos exascale-class computing cooling infrastructure certified

Exascale is the next frontier in computing and the key to solving some of the world’s most pressing scientific questions. Clean energy production, nuclear reactor lifetime extension, and nuclear stockpile aging will be some of the areas tackled at no fewer than a billion billion (10¹⁸) calculations per second. Although much is made of processor speed, Los Alamos has been in the business of high-performance computing since its origins in the Manhattan Project and understands that well-balanced, efficient computing is the key to the computational science breakthroughs it is known for.

The infrastructure includes warm-water cooling—a key component to computing at the exascale.

Balance and efficiency extend to the power and cooling infrastructure that support supercomputing. Putting efficient cooling infrastructure in place is a first step to enabling computing at the exascale and beyond. Los Alamos is taking a large step toward ensuring that cooling is ready for future supercomputing with its recent build and certification of a new exascale-class computing cooling infrastructure, a capital project.

Construction of the cooling infrastructure was completed in March, with commissioning and transition to operations in April, and project closeout in early May. The project, funded by the Advanced Simulation and Computing (ASC) Program, was managed by the Project Management Division and received significant support from data center experts in the High Performance Computing (HPC) Division. The project was executed in a fully functioning data center and therefore could not interrupt the ongoing computational mission of the Laboratory. Nevertheless, it was completed 10 months ahead of schedule and over $20 million under budget. The infrastructure serves the Nicholas C. Metropolis Center for Modeling and Simulation, where a large portion of Los Alamos and collaborator computing takes place.

Why water cooling?

Supercomputing centers get hot, and their systems require constant cooling. This is an energy-intensive process: with chilled-air cooling, it can consume 40 percent or more of a facility’s available power. As systems get bigger and their heat-producing processors become denser, a more efficient and economical cooling method is required.

Water can carry more heat than air and can be moved more efficiently around a data center, resulting in much lower costs for equivalent cooling. That’s why the recently completed cooling project at Los Alamos uses water. The infrastructure adds five cooling towers and delivers water to the data center floor, where it can be easily distributed to future supercomputers or to the chips themselves (if necessary) to carry heat away and allow the system to perform at its peak.
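Water’s advantage comes from its much larger volumetric heat capacity. For a coolant stream, the heat carried away is Q = ρ · V̇ · c_p · ΔT (density times volumetric flow times specific heat times temperature rise). The sketch below applies that relation with approximate textbook room-temperature properties and a hypothetical flow rate and temperature rise; the numbers are illustrative, not measurements from the Metropolis Center.

```python
def heat_removal_watts(volumetric_flow_m3_s, delta_t_k, density_kg_m3, specific_heat_j_kgk):
    """Q = rho * V_dot * c_p * dT: heat carried away by a coolant stream, in watts."""
    return density_kg_m3 * volumetric_flow_m3_s * specific_heat_j_kgk * delta_t_k


# Approximate room-temperature properties (textbook values, not LANL data)
WATER = {"density_kg_m3": 997.0, "specific_heat_j_kgk": 4181.0}
AIR = {"density_kg_m3": 1.2, "specific_heat_j_kgk": 1005.0}

flow = 0.001  # hypothetical: 1 litre per second of coolant
dt = 10.0     # hypothetical: coolant warms by 10 K
q_water = heat_removal_watts(flow, dt, **WATER)
q_air = heat_removal_watts(flow, dt, **AIR)
print(f"water: {q_water:.0f} W, air: {q_air:.1f} W, ratio: {q_water / q_air:.0f}x")
```

For the same volume flow and temperature rise, water carries roughly 3,500 times more heat than air, so far less fluid has to be moved to cool the same load.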

While most data centers remove heat by evaporating potable water, Los Alamos uses reclaimed water. The Sanitary Effluent Reclamation Facility (SERF) processes water from the waste treatment plant and sends it to the Metropolis Center and cooling infrastructure. Using SERF water puts water that is unsuitable for other uses to work, and because it has fewer particulates than Los Alamos groundwater, it can stay in the cooling towers longer.

The next frontier in computing

This new cooling capability will support future world-class high-performance computer systems at LANL, the first of which will be Crossroads in late 2021. However, exascale computing for Los Alamos is not simply focused on attaining supercomputers with more operations per second. The goal is to provide breakthrough solutions that address the nation’s most critical challenges in scientific discovery, energy assurance, economic competitiveness, and national security.

Balanced platforms, hardware, and software specific to this goal will also be required and are being investigated in parallel. Crossroads will include a number of major advances in computing technologies for simulating very complex physical systems. For future systems, Los Alamos founded a consortium to help overcome challenges associated with achieving efficient, effective supercomputing. The Efficient Mission-Centric Computing Consortium (EMC3) is open to vendors that produce and sell HPC systems as well as to user organizations looking to collaborate on the most difficult problems that must be overcome to serve members’ respective missions. At Los Alamos, these supercomputing systems will be used to modernize the nuclear deterrent and to understand the effects of aging in the deployed stockpile.

Funding and mission

The cooling infrastructure project was funded by the Advanced Simulation and Computing (ASC) Program. The facility supports the Laboratory’s Weapons Systems mission area and the Integrating Information, Science, and Technology for Prediction capability pillar.

Technical contact: Randal Rheinheimer

Materials Science and Technology

Accurate simulations boost nanomaterial understanding

Hybrid and composite materials offer new and improved properties for pushing the boundaries of science and technology. However, understanding atomic-level details of interfaces in these materials—areas where different materials meet—remains somewhat elusive. In particular, complex oxide heterostructures are finding widespread application in nanoscale technologies, but their complex interface structures, which harbor extended defects such as misfit dislocations, are not well characterized.

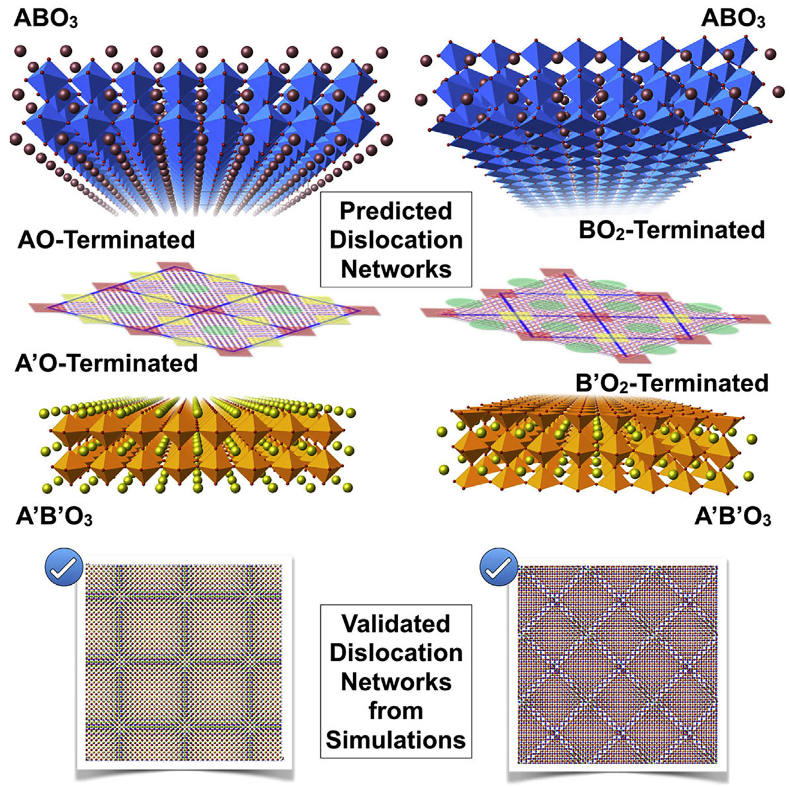

A graphical example illustrating predicted and validated representative dislocation network structures at perovskite–perovskite oxide semicoherent heterointerfaces.

To better understand these oxide materials, Los Alamos researchers from the Materials Science and Technology (MST) Division, along with a collaborator from the Rochester Institute of Technology, simulated a number of chemistry-dependent interfaces for different oxide materials at the atomic level.

The researchers used these simulated results to develop a new approach that can reliably identify misfit dislocation-containing interface structures in oxide heterointerfaces without the need for full-scale, computationally expensive atomistic simulations. Their model can be extended to other materials as well. Because studying interfaces experimentally can be daunting (they are buried, among other issues), this validated method offers widespread value in the field of materials science.

“Misfit dislocations at interfaces dictate a myriad of properties, including strength, conductivity, and potential for radiation tolerance,” said Ghanshyam Pilania of the Materials Science in Radiation & Dynamics Extremes Group (MST-8). “Quickly identifying the nature of these dislocations aids both computation and experiment in understanding these ubiquitous structures.”

Understanding interfaces leads to material understanding

Scientists study interfaces because these areas determine so much of a material’s properties. Understanding interfaces leads to understanding the material; as Nobel laureate Herbert Kroemer famously put it, “The interface is the device.”

That is precisely why these researchers set out to map the relationship between structure and chemistry at oxide interfaces. In these oxides, the crystal lattices of the two materials do not match, and the mismatch is accommodated by a network of misfit dislocations. The researchers showed that local bonding and elastic and electrostatic interactions across the interfaces are responsible for forming the diverse interface structures. They wanted to avoid explicit atomistic simulations, which must capture local atomic interactions that depend on interface orientation and termination chemistry, because such simulations typically take a very long time and demand heavy use of computational resources for accurate predictions.
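A simple geometric picture shows why mismatched lattices need misfit dislocations. If one material has lattice parameter a₁ and the other a₂, the two lattices come back into register only every D = a₁a₂/|a₁ − a₂|, so a fully relaxed interface needs roughly one misfit dislocation per distance D along each in-plane direction. This Vernier-type estimate is a textbook approximation, not the symmetry- and chemistry-aware model developed in the study, and the lattice parameters below are hypothetical round numbers.

```python
def lattice_mismatch(a_film, a_substrate):
    """Relative mismatch f = (a_film - a_substrate) / a_substrate."""
    return (a_film - a_substrate) / a_substrate


def misfit_dislocation_spacing(a1, a2):
    """Coincidence distance D where n cells of the smaller lattice span n - 1 of the larger."""
    if a1 == a2:
        return float("inf")  # perfectly matched lattices need no misfit dislocations
    return a1 * a2 / abs(a1 - a2)


# Hypothetical cubic lattice parameters in angstroms
a_film, a_substrate = 4.0, 3.9
print(f"mismatch: {lattice_mismatch(a_film, a_substrate):.2%}")
print(f"spacing: {misfit_dislocation_spacing(a_film, a_substrate):.0f} angstroms")
```

The estimate captures only geometry; which dislocation network actually forms also depends on the bonding, elastic, and electrostatic interactions the researchers identified.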

Their resulting model was able to semi-quantitatively predict misfit dislocation network structures in perovskite–perovskite and perovskite–rock salt oxide materials, which are predominantly ionic in nature. However, the general ideas of the model can be useful in predicting structures in other materials that are more complex, exhibiting covalent or mixed-nature bonding.

The novel approach is rapid, efficient, and accurate. It can aid in understanding and interpreting experimental studies. Development of such models allows for efficient prediction of the underlying atomic structure (and thereby the associated properties) needed to establish design principles for advanced and new materials that are functionally relevant to Laboratory missions.

Funding and mission

This research was supported by the U.S. Department of Energy, Office of Science, Basic Energy Sciences, Materials Sciences and Engineering Division and by the Rochester Institute of Technology College of Science. This research used computational resources provided by the Los Alamos National Laboratory Institutional Computing Program. The work supports the Laboratory’s Energy Security mission area and the Materials for the Future capability pillar.

Reference: Ghanshyam Pilania, Pratik P. Dholabhai (Rochester Institute of Technology), Blas P. Uberuaga. “Role of Symmetry, Geometry, and Termination Chemistry on Misfit Dislocation Patterns at Semicoherent Heterointerfaces.” Matter 2, 1324–1337, May 6, 2020. https://doi.org/10.1016/j.matt.2020.03.009

Technical contact: Ghanshyam Pilania